In Solana trading, milliseconds separate winners from spectators. Your bot's strategy might be brilliant, but if your RPC infrastructure adds 200ms of latency, you're essentially trading yesterday's prices. Over 70% of sniper bots focus on reducing RPC latency to under 50ms for faster transaction sends, yet only about 10% deliver steady profits, often because infrastructure kills their edge before strategy gets a chance.

The game changed in 2025-2026. Public RPC endpoints filter, rate-limit, and de-prioritize your transactions during congestion. Meanwhile, sophisticated traders run dedicated nodes with Jito ShredStream, stake-weighted QoS, and validator co-location, receiving block data ~2 minutes earlier on average compared to standard methods. This isn't about minor optimizations anymore. It's about whether your transactions even reach the leader validator during peak activity.

This guide breaks down the technical infrastructure that actually works in 2026: from choosing between Yellowstone gRPC and ShredStream feeds to configuring stake-weighted QoS for guaranteed leader access.

Public RPC endpoints are convenient for testing, but they're dead for production HFT. Public RPC endpoints are often monitored using broad logging with payload details, rate-limited, and throttled during volatility. When network activity spikes, your transactions get dropped while dedicated node operators sail through.

Here's what serious infrastructure looks like in 2026:

Performance targets: Sub-4ms latency via dedicated nodes in nearest locations with optimized configs and custom RPC tuning. Compare this to public RPCs that often deliver 50-200ms response times during congestion. Your landing rate should hit 99%+ with propagation times under 100ms and a 99 percent transaction landing rate.

Should you run an RPC node, a full validator, or both? The answer depends on your transaction volume and capital.

The hybrid approach wins for most HFT operations: Run a dedicated RPC node and establish a stake-weighted QoS peering relationship with a trusted validator. RPC operators can use the --rpc-send-transaction-tpu-peer flag to forward transactions to a specific leader through staked connections Helius. You get validator-grade priority without running consensus infrastructure yourself.

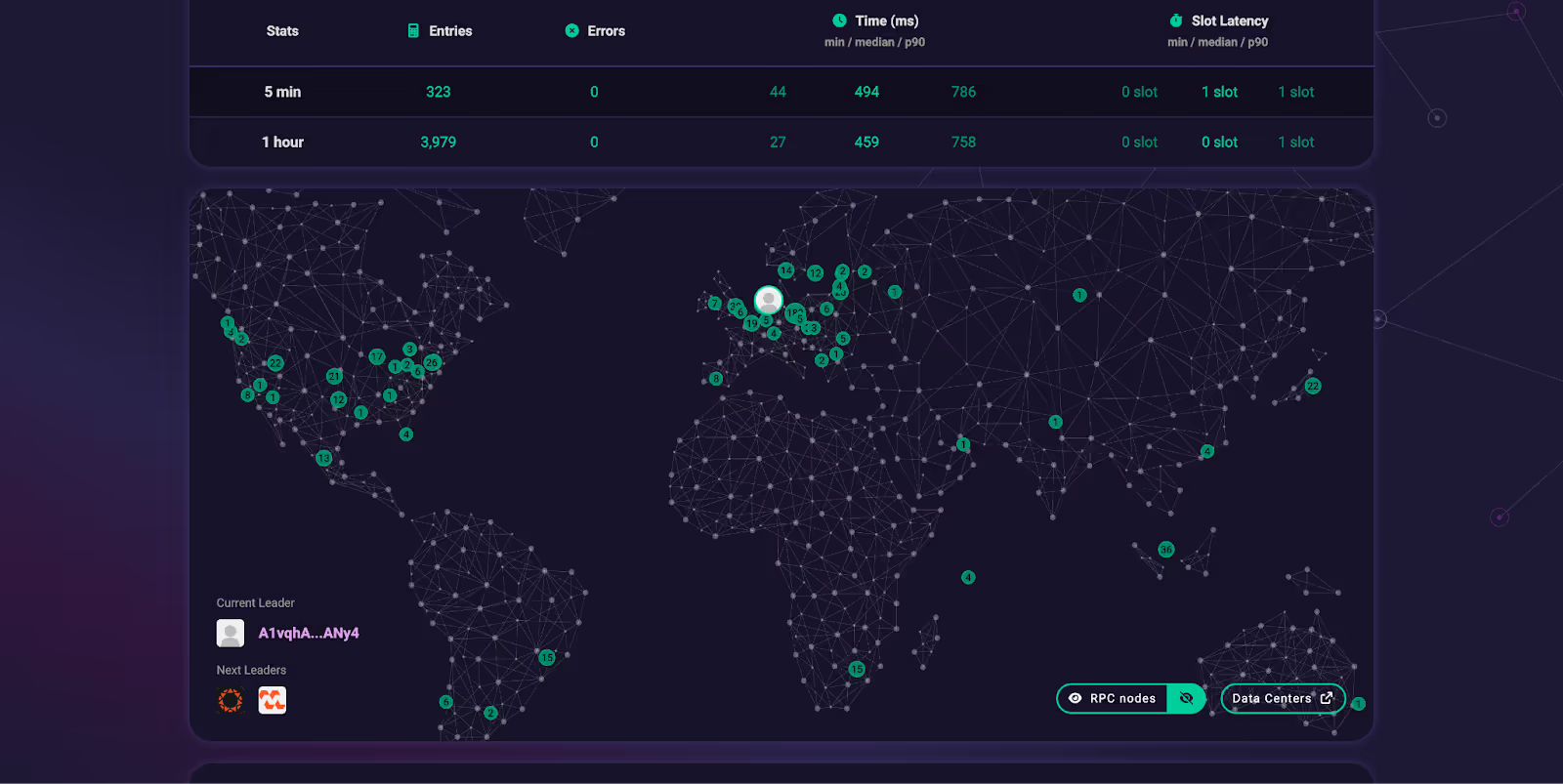

Single-region deployments create single points of failure. Reliable providers invest in geographically distributed clusters, often across multiple cloud regions or bare-metal data centers RPC Fast. Network partitions happen. Datacenter power fails. Your infrastructure needs to survive these events without missing trades.

Primary regions for Solana HFT in 2026:

Multi-region doesn't mean running three identical setups. Deploy your primary trading node in the region closest to your target DEXs and validator partners. Use secondary regions for failover and data redundancy, not active trading (unless latency is comparable).

Benchmarking is mandatory before production. Run at least a 7-14 day benchmark from the regions where you host trading infra, with a realistic mix of reads and writes. Plot p50/p95/p99 across calm and volatile hours. Don't trust single-day tests or vendor marketing claims. Network performance varies dramatically during token launches, airdrops, and major market moves. Your infrastructure needs to perform when it matters most, not just during idle periods.

Implement automatic endpoint switching with health checks every 5-10 seconds. If your primary node shows slot lag >2 or response times >100ms, your bot should immediately route to backup endpoints. This requires maintaining warm connections to multiple nodes simultaneously, not discovering the failure after 30 seconds of timeouts.

Getting blockchain data fast enough matters more than your trading logic. Solana offers multiple data ingestion methods, each with different latency profiles and complexity trade-offs.

The default method most developers start with, and usually the bottleneck that kills their bot's performance.

Core methods:

accountSubscribe - monitor specific account changeslogsSubscribe - capture program execution logsslotSubscribe - track slot progressionblockSubscribe - receive full block dataThe latency problem: If your bot is reading pool changes, swap instructions, or token listings from a public RPC, you're already late. You're getting filtered, rate-limited, and de-prioritized during congestion. Standard WebSockets typically deliver data 150-300ms behind the actual on-chain event.

When to use it: Honestly, only for non-critical monitoring or as a fallback mechanism. If your strategy depends on being first, standard RPC subscriptions will cost you money. They're fine for portfolio tracking, historical analysis, or low-frequency trading where 200ms doesn't matter.

Configuration example:

const connection = new Connection(RPC_ENDPOINT);

connection.onSlotChange((slotInfo) => {

// Already 200ms behind by the time you see this

console.log('Current slot:', slotInfo.slot);

});Reserve standard RPC for reliability checks and redundancy, not primary data ingestion.

The first major upgrade path for serious bots. Yellowstone streams data directly from the validator using efficient binary protocols instead of text-based JSON.

Key advantages:

Latency improvement: Roughly 50-100ms faster than standard RPC subscriptions. Yellowstone gRPC removes wasted traffic using filters and mechanisms like account data slicing. Instead of receiving every transaction and filtering client-side, you tell the server exactly what you want.

The from_slot advantage: Shyft gRPC nodes enable you to stream data from historical slots. If your stream broke at slot X, you can reconnect from Slot X by mentioning the from_slot parameter. This prevents data gaps during network hiccups or node restarts.

Practical setup for DEX monitoring:

# Subscribe only to Raydium pool updates

filters = [

SubscribeRequestFilterAccounts(

account=["RAYDIUM_PROGRAM_ID"],

filters=[

SubscribeRequestFilterAccountsFilter(

memcmp=MemcmpFilter(offset=0, bytes=b"pool_state")

)

]

)

]ShredStream integration: Chainstack Solana nodes have Jito ShredStream enabled by default, providing significantly enhanced performance for Yellowstone gRPC Geyser streaming. Many providers now bundle ShredStream acceleration into their Yellowstone offerings, giving you better consistency without extra configuration.

The performance king for HFT operations. ShredStream delivers data before the Solana node finishes processing it.

Shreds are fragments of data used in the Solana blockchain to represent parts of transactions before they are assembled into a full block. Jito's service streams these shreds directly from block leaders, bypassing the normal Turbine gossip propagation.

Using direct gRPC subscription to Jito Shredstream endpoint, you will receive transactions ~2 minutes earlier on average compared to Yellowstone gRPC, receive even more transactions including failed and votes, and avoid time-consuming replay of transactions by RPC node.

Let that sink in. Two minutes. In HFT terms, that's multiple lifetimes.

What you gain:

The proxy client connects to the Jito Block Engine and authenticates using the provided keypair. It sends a heartbeat to keep shreds flowing and distributes received shreds to all destination IP ports.

Basic setup flow:

# Run Jito ShredStream proxy

RUST_LOG=info cargo run --release --bin jito-shredstream-proxy -- \

shredstream \

--block-engine-url https://mainnet.block-engine.jito.wtf \

--auth-keypair my_keypair.json \

--desired-regions amsterdam,ny \

--dest-ip-ports 127.0.0.1:8001Jito Shredstream gRPC endpoint is bundled as a free add-on to RPC Fast Solana dedicated node offering. Most premium providers include ShredStream access rather than charging separately.

Raw shreds arrive encoded. You'll need protobuf definitions from Jito's mev-protos repository and either build your own decoder or use reference implementations. The complexity is worth it. When you're competing against other sniper bots, receiving data 100-150ms earlier means you land transactions in earlier blocks.

bloXroute's alternative approach using their Blockchain Distribution Network (BDN) to accelerate shred delivery globally.

bloXroute Solana BDN sends and delivers shreds fast with expected latency improvements of 30-50ms. Instead of relying on Solana's native Turbine protocol alone, OFRs leverage bloXroute's BDN to intelligently route and prioritize shred delivery using advanced networking techniques including optimized relay topologies and parallelized data distribution.

Early testing shows an average of 100-200 milliseconds of latency improvement over public Solana RPC nodes, and 30-50ms improvement over local nodes running with Solana Geyser. Sounds impressive, but real-world testing tells a different story.

According to our tests, Jito performs better than bloXroute OFR (as of summer 2025). Multiple benchmark reports from infrastructure providers show Jito ShredStream consistently delivering faster updates with better reliability.

When bloXroute makes sense:

bloXroute offers TX streamer for real-time gRPC transaction feed, or you can parse raw shreds directly from their OFR client. The Gateway client runs as Docker container or binary on your infrastructure.

Most HFT shops in 2026 treat bloXroute as backup.

The winning approach combines multiple feeds intelligently rather than betting everything on one source.

Run Jito ShredStream and bloXroute OFR simultaneously, pushing shreds to your Solana node from both sources. A smart routing system automatically uses whichever feed delivers first, with all data feeders pushing shreds to the Solana node in addition to p2p Turbine, and the node uses the data that arrived fastest.

There has been a trend to replace the "Solana node + Yellowstone gRPC" pair with Jito ShredStream gRPC, so as not to wait for the Solana node. Instead of streaming from your node after it processes data, consume directly from ShredStream and skip node latency entirely.

Trade-offs to consider:

Maintain long-lived gRPC streams with explicit keepalive patterns. The Yellowstone client supports ping for idle connection survival behind load balancers. Handle disconnections gracefully using from_slot replay to avoid missing critical data during reconnection windows.

The biggest cost drivers are not per-request pricing but engineering hours, backfills, and wasted bandwidth. A single well-configured ShredStream connection often outperforms five standard RPC subscriptions while consuming less bandwidth and requiring simpler code.

Choose your feeds based on measured performance in your specific deployment environment, not theoretical benchmarks. Run A/B tests measuring actual transaction landing success rates and time-to-detection for opportunities your bot targets.

Receiving data fast means nothing if your transactions sit in queues or get dropped. Solana's transaction execution layer requires understanding fee mechanics, compute optimization, and direct validator communication.

Solana's fee structure has two components working together to determine if and when your transaction gets processed.

Every transaction costs 5000 lamports per included signature, paid by the first signer on the transaction. At $100 per SOL, that's $0.0005 per transaction. This base fee is split 50/50 between burning (removing from supply) and paying the validator who processed it. Simple, fixed, and non-negotiable.

Priority fees work differently and determine your actual queue position. Priority fees are the product of a transaction's compute budget and its compute unit price measured in micro-lamports: priorityFees = computeBudget * computeUnitPrice. Think of it as bidding for faster processing during network congestion.

Validators use a specific prioritization logic here. The fee priority of a transaction is determined by the number of compute units it requests. The more compute units a transaction requests, the higher the fee it'll have to pay to maintain its priority in the transaction queue. This prevents spam from computationally heavy transactions.

Here's how the calculation works in practice. You set a Compute Unit Limit (how many CUs your transaction needs, max 1.4M per transaction) and a Compute Unit Price (micro-lamports you'll pay per CU). Multiply them together and you get your total priority fee.

Never hardcode your priority fees. The getRecentPrioritizationFees method provides real-time insights into recent priority fee data on Solana, helping you dynamically gauge how much extra fee others are paying. It returns priority fees from the last 150 blocks that successfully landed transactions, giving you a baseline for current network conditions.

Specialized APIs provide even better estimation. Helius offers a Priority Fee API that does additional calculations to provide better priority fee estimates with six priority levels to ensure fast confirmation and cost efficiency. During normal conditions, use lower priority levels. During launches or airdrops, crank them up.

Example using Helius API:

const response = await fetch('https://api.helius.xyz/v1/priority-fee', {

method: 'POST',

body: JSON.stringify({

transaction: serializedTx,

options: { priorityLevel: 'high' }

})

});

// Returns recommended fee in micro-lamportsHere's the reality check though. Priority Fees minimally affect landing times on their own. Priority fees help during moderate congestion but won't save you during extreme network stress. They determine ordering within your lane, not whether you get a lane at all.

Paying for unused compute units wastes money at scale. When you're sending thousands of transactions daily, optimization compounds into significant savings.

Most developers fall into the default trap. By default, each instruction is allocated 200,000 CUs and each transaction is allocated 1.4 million CUs. Most transactions use far less than this, but here's the problem: you pay based on what you request, not what you consume.

The math reveals the waste quickly. The priority fee is determined by the requested compute unit limit, not the actual number of compute units used. If you set a compute unit limit that's too high or use the default amount, you may pay for unused compute units.

Let's say your actual transaction uses 50,000 CUs but you request the default 200,000 CUs. You're paying 4x more in priority fees than necessary. Across 10,000 transactions per day, this compounds into serious capital inefficiency.

Simulation solves this problem cleanly. To calculate the appropriate CU limit for your transaction, we recommend simulating the transaction first to estimate required CU units.

The proper workflow looks like this:

/* 1. Simulate to find actual usage */

const simulation = await connection.simulateTransaction(transaction);

const unitsConsumed = simulation.value.unitsConsumed;

/* 2. Add 10–20% buffer for safety */

const optimalLimit = Math.ceil(unitsConsumed * 1.15);

/* 3. Set precise compute budget */

const computeLimitIx = ComputeBudgetProgram.setComputeUnitLimit({

units: optimalLimit

});

/* 4. Set your price per unit */

const computePriceIx = ComputeBudgetProgram.setComputeUnitPrice({

microLamports: priorityFeePerCU

});

/* Add both to your transaction */

transaction.add(computeLimitIx, computePriceIx);Run simulations during development and cache typical CU consumption patterns for your common operations. Swap transactions might use 80K CUs, liquidity additions 150K CUs, complex arbitrage routes 200K CUs. Build a lookup table and adjust dynamically based on transaction complexity rather than recalculating every time.

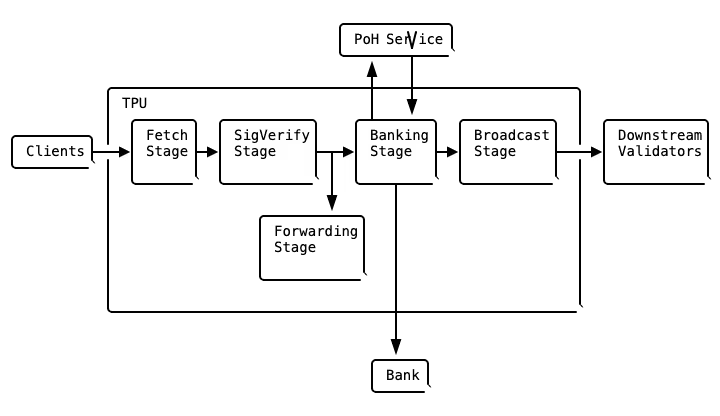

Understanding how transactions reach validators separates amateur bots from professional infrastructure.

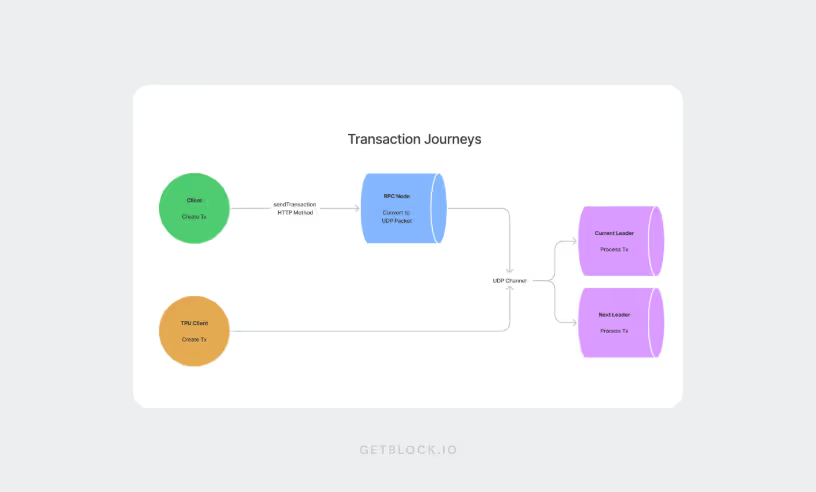

The standard path goes through RPC intermediaries. Users create transactions and submit them to RPC nodes via the JSON RPC API. These nodes act as intermediaries between users and Solana's validators, routing transactions directly to the current leader's TPU.

QUIC protocol fundamentally changed this architecture in recent Solana versions. Connections made to a leader are now made via QUIC. Since QUIC requires a handshake, limits can be placed on an actor's traffic so the network can focus on processing genuine transactions while filtering out spam. This replaced the old UDP-based system that was vulnerable to spam attacks.

The standard RPC relay flow works like this. Your bot calls sendTransaction() on an RPC endpoint. The RPC node validates transaction format, then forwards to the current leader's TPU via QUIC. The leader queues the transaction for processing according to priority fees and stake-weighted QoS rules.

Advanced setups can skip the RPC middleman entirely. Connect directly to leader validators' TPU ports, which requires tracking the leader schedule and maintaining QUIC connections. This eliminates RPC relay latency but adds significant infrastructure complexity.

The complexity trade-off is real. Direct TPU saves maybe 10-20ms but requires significantly more infrastructure code. Most teams get better ROI from optimizing other parts of the stack first. You need robust leader schedule tracking, QUIC connection management, and failover logic when leaders rotate every 4 slots (1.6 seconds).

Single endpoint submission creates a single point of failure. Network conditions vary, RPC nodes have hiccups, and leaders might be unreachable from certain paths.

Parallel submission dramatically increases reliability. Broadcasting a single signed transaction to multiple RPC/relay endpoints in parallel increases the probability that at least one path delivers it quickly to the current leader. Don't wait for the first attempt to fail before trying alternatives. Send simultaneously and let the fastest path win.

Three to five endpoints strikes the right balance. More than that creates diminishing returns and wastes bandwidth. Less than three leaves you vulnerable to single-node failures. The optimal mix combines different strengths: geographic regions (US and EU endpoints), different providers (Helius, Triton, your dedicated node), specialized relays like Jito Block Engine for MEV protection, and one connection to a high-uptime fallback.

Jito Block Engine deserves special attention here. Jito Low Latency Transaction Send or Jito Block Engine transaction submission provides direct, priority transaction submission with bundle support for MEV-aware trading. This isn't just another endpoint. It's a specialized relay that validators running Jito-Solana client prioritize, giving your transactions better treatment.

Implementation pattern:

const endpoints = [

{ url: DEDICATED_NODE_URL, name: 'primary' },

{ url: HELIUS_RPC_URL, name: 'helius' },

{ url: JITO_BLOCK_ENGINE_URL, name: 'jito' },

{ url: TRITON_RPC_URL, name: 'triton' }

];

// Send to all simultaneously

const promises = endpoints.map(endpoint =>

sendTransactionWithRetry(transaction, endpoint)

);

// Wait for first success, but track all responses

const result = await Promise.race(promises);Smart failover logic monitors endpoint health continuously. Track response latency (p95 should stay under 100ms), success rate (target >95% landing), and slot freshness (within 1-2 slots of network). If an endpoint degrades, automatically reduce its priority in your rotation until health improves. Keep monitoring all endpoints even when not actively using them, so you know which backups are ready when needed.

Cost implications matter for metered services. Some providers charge per request, so sending the same transaction to 5 endpoints means 5x API costs. For dedicated nodes with unlimited requests, this doesn't matter. For metered services, factor this into your economics. The trade-off is usually worth it though. Better to pay 5x API costs and land the trade than save money and miss the opportunity entirely.

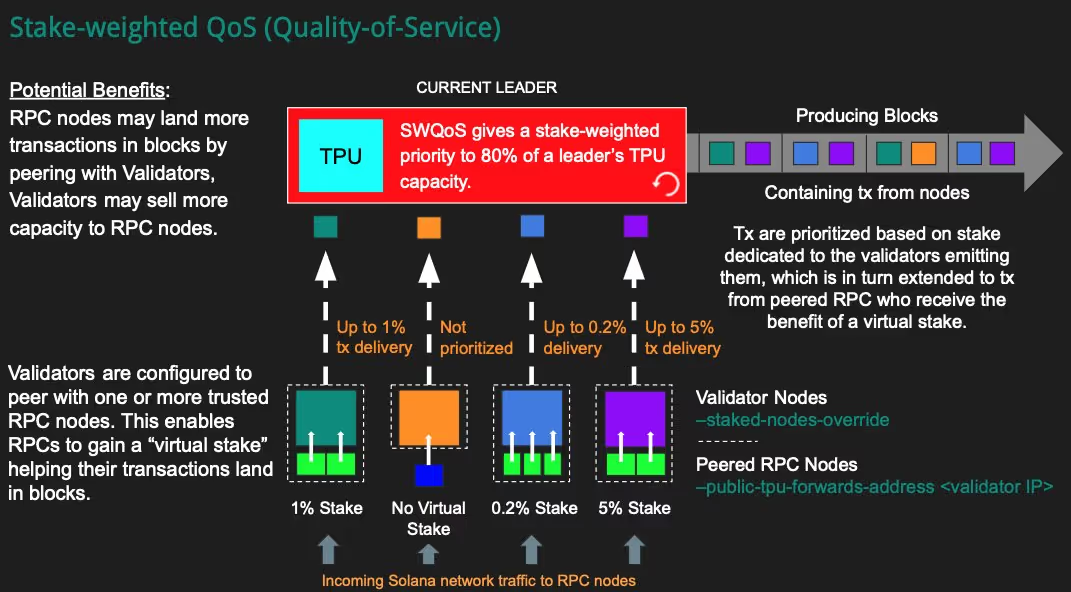

Stake-weighted Quality of Service is the difference between your transactions reaching the leader during congestion and sitting in dropped packet hell. Think of it as a VIP lane where access is determined by validator stake rather than who shouts loudest.

The core mechanism is beautifully simple in concept. Stake-weighted QoS allows leaders (block producers) to identify and prioritize transactions proxied through a staked validator as an additional sybil resistance mechanism. Given that Solana is proof-of-stake, extending stake utility to transaction prioritization makes natural sense.

The allocation model provides guaranteed bandwidth. Today, Stake-weighted QoS gives a stake-weighted priority to 80% of a leader's TPU capacity. If a validator holds 1% of network stake, it gets to transmit up to 1% of packets to the leader. No amount of spam from unstaked nodes can wash out these reserved channels.

RPC nodes gain access through validator partnerships. RPC nodes are unstaked, non-voting, and therefore non-consensus. To use staked connections, RPC operators must use the --rpc-send-transaction-tpu-peer flag. The validator extends "virtual stake" to trusted RPC partners, allowing their transactions to ride the validator's priority allocation.

QUIC protocol makes this enforcement possible. Connections made to a leader are now made via QUIC. Since QUIC requires a handshake, limits can be placed on an actor's traffic so the network can focus on processing genuine transactions. The handshake identifies which stake-weighted connection you're coming from.

Setting up SWQoS requires coordination between validator and RPC nodes. Both sides need specific configuration flags and file formats.

Validator side configuration:

Validators must define which RPC nodes receive virtual stake by using the --staked-nodes-overrides flag. This tells the validator to treat certain RPC nodes as if they have specific stake amounts.

The overrides file format:

{

"staked_map_id": {

"IDENTITYaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa1": 150000000000000,

"IDENTITYbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbb2": 150000000000000

}

}Remember that 1 SOL equals 1 billion lamports. These virtual stake amounts don't move real SOL but affect transaction priority allocation.

RPC side configuration:

RPC operators must use the --rpc-send-transaction-tpu-peer flag, which requires the IP and port of the TPU of the staked validator. The TPU port is typically the dynamic port range plus three, discoverable through Gossip.

Startup command example:

solana-validator \

--rpc-send-transaction-tpu-peer 192.168.1.100:8009 \

# other flags...Version requirements and verification:

You need Agave client v1.17.28 or later on the RPC side to support the necessary flag. RPC operators should check their logs for entries like solana_quic_client and warm to verify their connection. These log entries confirm the QUIC connection successfully established with the validator's TPU.

The performance difference is dramatic, not marginal. 83% first-block hit rate thanks to stake-weighted QoS, with 83% of transactions confirming in the first block, outperforming standard RPCs. Compare that to sub-40% first-block rates without SWQoS during congestion.

Latency improvements compound with other optimizations. swQoS is the most effective at reducing latency for all transaction types, beating both priority fees and Jito tips in isolation. The mechanism guarantees your transaction reaches the leader, while priority fees only affect ordering once transactions arrive.

The advantage becomes critical during network stress. When token launches or airdrops flood the network, non-SWQoS transactions simply get dropped before reaching the leader. SWQoS connections maintain throughput because they have reserved bandwidth that spam cannot consume.

Partnership requirements create barriers to entry. These agreements must be made directly between RPC operators and Validators and include the implementation of the steps captured below to complete the peering. You can't just configure SWQoS unilaterally. Both parties must trust each other and coordinate setup.

Who benefits most:

Provider options for 2026:

Major infrastructure providers offer SWQoS access through existing validator partnerships:

The DIY approach works for large operations. Run your own validator with sufficient stake (minimum 5,000 SOL to be competitive) plus your RPC infrastructure. Exchanges who host their own validator nodes and RPC nodes on the same infrastructure will be able to enable the feature internally, comfortable that the RPC nodes running on their own infrastructure can be trusted.

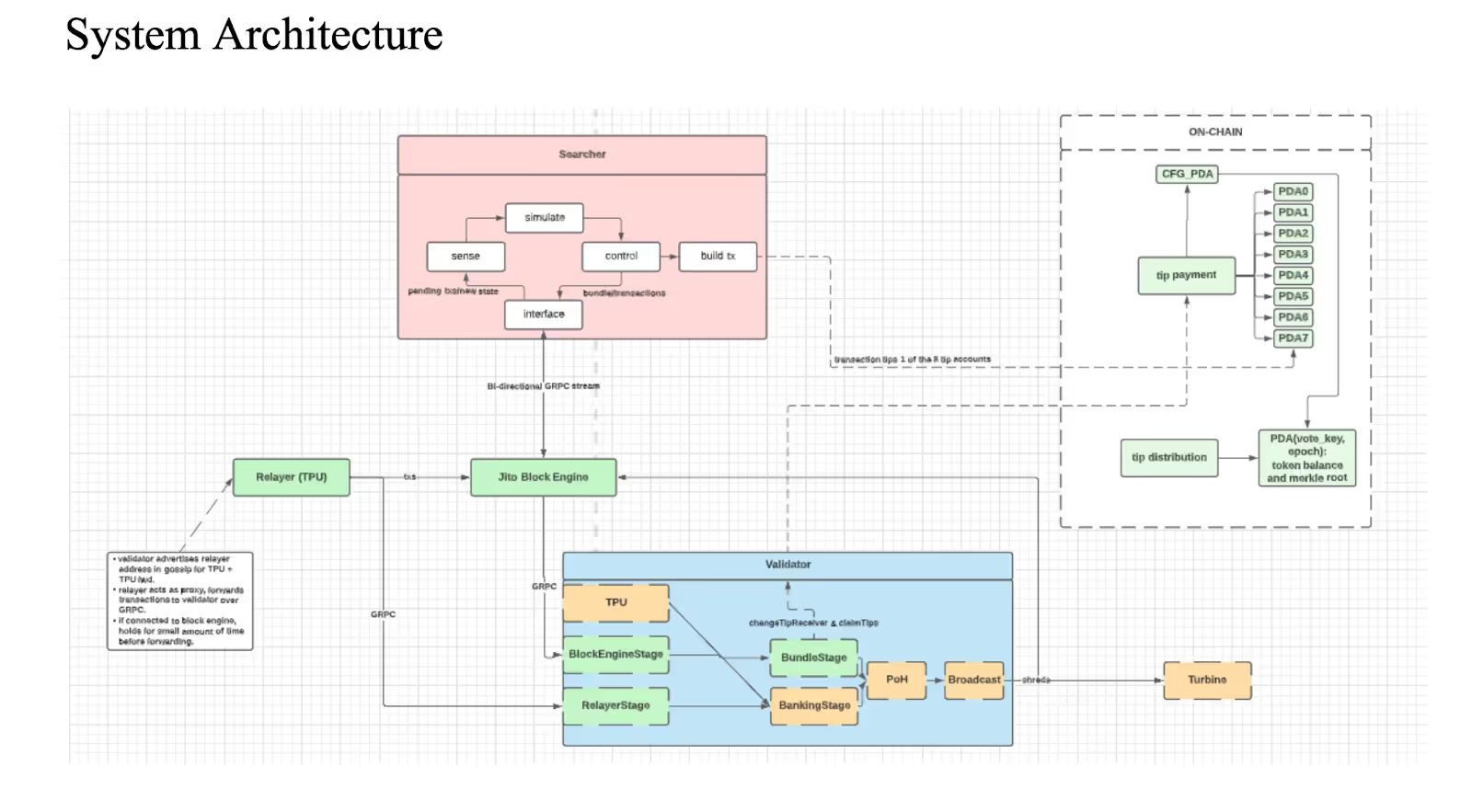

MEV (Maximal Extractable Value) on Solana works differently than Ethereum, but the infrastructure for capturing it has matured significantly through Jito's ecosystem.

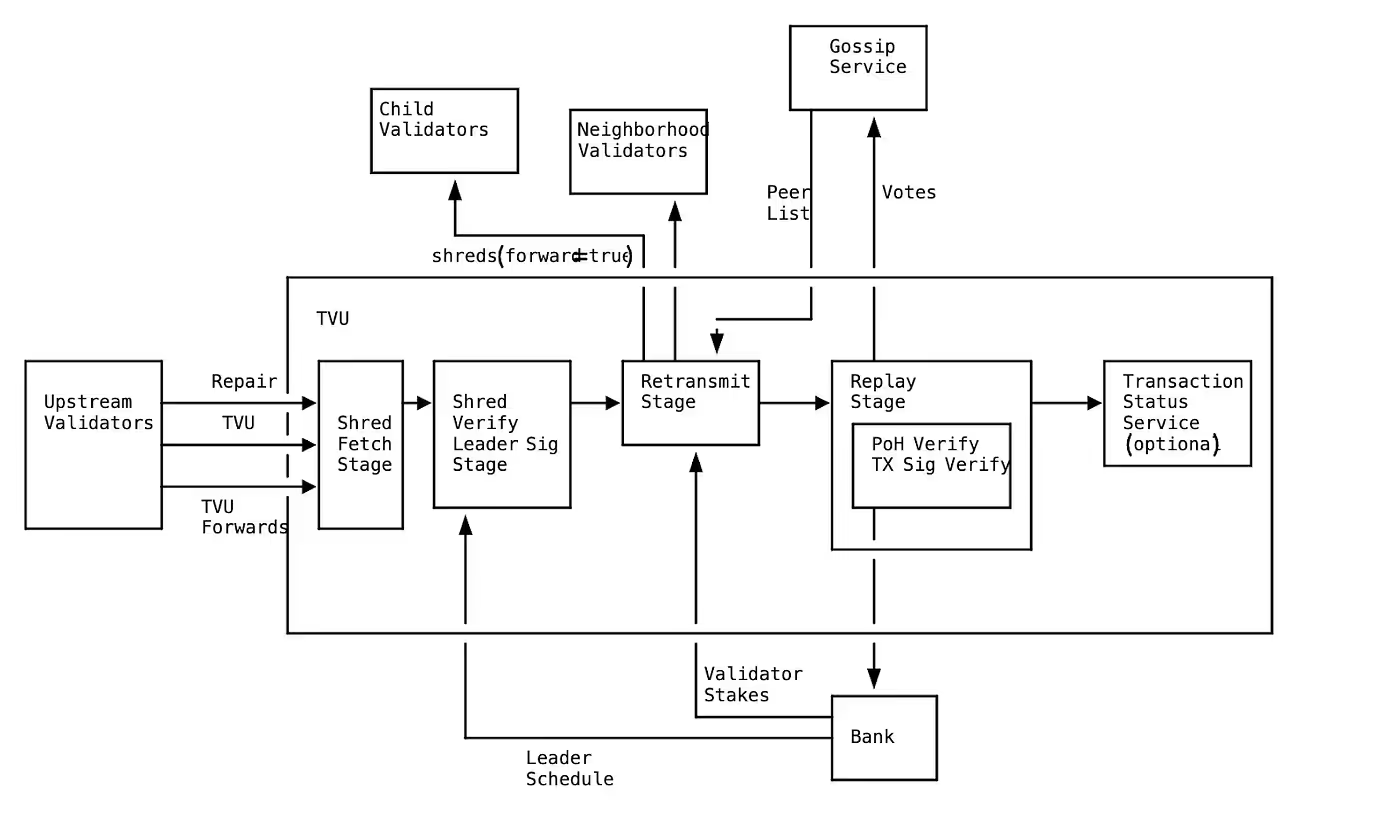

Below is a high-level diagram of the Jito system architecture. It shows how user transactions flow into the network, how Jito's off-chain components (Relayer, Block Engine) handle them:

Jito transforms how sophisticated traders submit transactions. Bundles offer MEV protection, fast transaction landing, support for multiple transactions, and revert protection, making it ideal for developers of Telegram bots and automated trading systems.

The bundle mechanism ensures atomicity. Multiple transactions either all execute in order or none execute at all. This prevents partial fills on complex arbitrage routes or situations where your first transaction succeeds but the profitable second one fails.

Key API methods:

sendBundle - submit transaction bundles with tipssimulateBundle - test bundle execution before sendinggetBundleStatuses - track bundle inclusion across slotsValidators running Jito-Solana client receive bundles through a separate pathway. Jito's fast transaction sending is essential for any Solana MEV user requiring rapid and reliable transaction execution. The Block Engine routes your bundle to validators who opt into processing Jito bundles, currently representing significant network stake.

Multi-step strategies require atomic execution. Arbitrage across three DEXs only profits if all three swaps execute. Land the first two but fail the third, and you're left holding inventory at a loss.

Bundle composition for arbitrage:

const bundle = [

swapOnRaydiumTx,

swapOnOrcaTx,

swapBackOnRaydiumTx

];

// All three execute atomically or none execute

await jitoClient.sendBundle(bundle, {

tipLamports: calculateOptimalTip(expectedProfit)

});Tip calculation balances cost versus inclusion probability. Tips go directly to validators as incentive for priority bundle processing. During moderate activity, 0.001-0.01 SOL tips work. During extreme congestion or high-value opportunities, tips can exceed 0.1 SOL.

Timing considerations:

Send bundles 50-100ms before slot transitions. Jito validators collect bundles during a slot and execute profitable ones in their next leader slot. Earlier submission gives validators more time to simulate and prioritize your bundle.

Revert protection matters for capital preservation. Standard transactions can fail after execution, consuming fees and priority payments. Jito bundles revert entirely if any transaction fails, preventing loss from partial execution. You only pay tips when the entire bundle succeeds.

The combination creates a closed detection-to-execution loop. ShredStream ensures minimal latency for receiving shreds, which can save hundreds of milliseconds during trading on Solana, critical for high-frequency trading environments.

The workflow maximizes edge at every stage:

This architecture explains why top-performing sniper bots achieve 70-80% win rates. They see opportunities first through ShredStream, execute atomically through bundles, and bypass competition by landing in earlier blocks.

MEV extraction beyond sniping:

The key insight is combining data speed (ShredStream) with execution guarantees (bundles). Having one without the other leaves money on the table. See opportunities late but execute well, and someone faster beats you. See opportunities early but execute without atomicity, and you take unnecessary execution risk.

Infrastructure means nothing if you can't measure whether it's actually performing. Continuous monitoring separates teams that think their setup is fast from teams that know their exact performance envelope.

Transaction landing metrics define success:

Latency under 4ms achieved with dedicated nodes in optimal regions, custom-tuned configs, and low-latency RPC paths. Your p50 should stay under 10ms, p95 under 50ms, and p99 under 100ms. Anything higher indicates network issues or suboptimal routing.

Slot synchronization matters for timing:

Propagation times under 100 ms and a 99 percent transaction landing rate sets the baseline. Target 99.99% uptime, which allows only 52 minutes of downtime per year. Anything less means missing trades during critical market events.

Never deploy without comprehensive testing across realistic conditions. Run at least a 7-14 day benchmark from the regions where you host trading infra, with a realistic mix of reads and writes. Plot p50/p95/p99 across calm and volatile hours.

Single-day tests lie. Network performance varies dramatically between quiet Tuesday mornings and Saturday night token launches. Your infrastructure needs to perform when volume spikes 100x, not just during idle periods.

Peak congestion events to test:

Deploy test bots in all target regions simultaneously. Send identical transactions from US, EU, and Asia nodes. Measure which regions consistently deliver better landing rates and lower latency to specific DEX programs. Geography matters more than most teams expect.

Compare your dedicated infrastructure against public RPC baselines. Run A/B tests where 50% of transactions use your optimized setup and 50% use standard public RPC. The performance delta justifies infrastructure investment when measured empirically.

Observability requires purpose-built tooling, not just general server monitoring.

Prometheus exporters capture RPC-specific metrics:

Build separate dashboards for different operational needs. Trading operations wants transaction landing rates and profit/loss. Infrastructure teams need slot synchronization and endpoint health. Management wants uptime and cost efficiency.

Critical visualizations:

Alert thresholds that matter:

PagerDuty or similar incident management integrates with your monitoring stack. During high-value trading opportunities, you can't afford to discover infrastructure problems after missing trades.

Speed without security creates bigger problems than slow infrastructure.

Dedicated HFT nodes are deployed as single-tenant machines with private networking, optional IP whitelisting, and firewalling. Never share infrastructure with untrusted parties. Your transaction patterns and strategy timing leak through shared resources.

Access control layers:

Hardware security modules (HSM) store private keys for high-value wallets. YubiKey or Ledger devices work for smaller operations. Never store plaintext private keys in environment variables or config files on production servers.

Separate hot wallets (active trading, smaller balances) from cold wallets (reserves, larger balances). Automated transfer scripts replenish hot wallets as needed without exposing full capital to infrastructure compromise.

Implement your own rate limiting before infrastructure bottlenecks force it. Control transaction submission rates based on account nonce management and recent blockhash expiration (transactions invalid after ~60 seconds).

DDoS protection and incident response:

Work with providers offering built-in DDoS mitigation. Each node is protected with optional IP whitelisting, firewalls, or private VPN tunnels. Your RPC endpoints will be discovered by competitors and potentially attacked. Plan for this scenario rather than hoping it doesn't happen.

Audit logging captures every action. Log all transaction attempts, failures, latency anomalies, and configuration changes. When something goes wrong at 3 AM during a profitable market move, logs determine whether the issue was infrastructure, strategy, or external attack.

Understanding total cost of ownership prevents surprises after deployment.

Dedicated node pricing ranges significantly:

Dedicated node pricing typically runs $1,500-5,000/month depending on specs and features. Entry-level setups with basic RPC access start around $1,500. High-performance configurations with ShredStream, SWQoS, multi-region redundancy, and 24/7 support reach $5,000+.

What's included vs additional costs:

Free add-ons vary by provider. Jito Shredstream gRPC endpoint is bundled as a free add-on to RPC Fast Solana dedicated node offering. Some providers charge separately for bloXroute BDN access, premium support, or multi-region deployments.

Pricing models matter for HFT:

Request-based pricing (pay per API call) becomes expensive fast at HFT volumes. Flat-fee unlimited RPS plans suit high-frequency operations better. Sending 100,000 transactions per day at $0.001 per request costs $3,000/month on metered plans. Unlimited plans at $2,500/month save money while eliminating overage surprises.

Hidden costs to factor in:

Provider comparison for 2026:

Leading Solana RPC providers each have different strengths:

Evaluation criteria that matter:

Don't choose based on marketing claims. Run at least a 7-14 day benchmark from the regions where you host trading infra. Measure actual latency, landing rates, and uptime during both calm and volatile periods.

Request reference customers running similar HFT operations. Ask about incidents during major network events. Check status pages for historical uptime data. The cheapest provider costs more when it fails during your most profitable trading opportunity.

Building production-grade Solana HFT infrastructure requires systematic execution across multiple phases. Rush the foundation and everything built on top suffers.

Start with solid basics before optimization. Choose a provider offering dedicated nodes in your target region. Enable Yellowstone gRPC for structured data streaming. Implement basic transaction submission and monitoring. Benchmark against public RPC to establish baseline improvement.

Add ShredStream access to your dedicated node. Using direct gRPC subscription to Jito Shredstream endpoint, you will receive transactions ~2 minutes earlier on average compared to Yellowstone gRPC. Run parallel data feeds and measure actual detection time differences for your specific use cases. Build shred decoding pipeline if consuming raw ShredStream data.

Establish relationships with validators offering SWQoS partnerships. 83% first-block hit rate thanks to stake-weighted QoS, with transactions confirming in the first block, outperforming standard RPCs. Configure both validator and RPC sides with proper flags and verification. Test during network congestion to validate priority access.

Deploy parallel transaction submission across 3-5 endpoints including Jito Block Engine. Implement bundle construction for atomic multi-step strategies. Add intelligent failover logic with health monitoring. Test tip calculation formulas for different market conditions.

Monitor performance metrics daily. Tune priority fees based on network conditions. Expand to additional geographic regions as trading volume grows. Optimize compute unit requests as transaction patterns evolve. Review and update validator partnerships quarterly.

Dysnix has been powering HFT operations on Solana since the network's early days. Our infrastructure delivers sub-4ms latency, 83% first-block hit rates, and 99.99% uptime through bare-metal servers colocated with validator constellations. We handle the complexity of ShredStream integration, stake-weighted QoS configuration, and continuous performance optimization, so your team can focus on trading strategies rather than infrastructure debugging.

What we provide:

Every millisecond counts in HFT. Don't waste months building infrastructure from scratch when production-ready solutions exist.

Ready to eliminate infrastructure as your bottleneck? Contact us for a free technical consultation. We'll review your current setup, identify performance gaps, and design an infrastructure roadmap that actually delivers the speed your strategies deserve.

Visit our blog or reach out directly to discuss your requirements.