By January 2026, Solana had a market cap of roughly $70 billion, and its trading landscape was dominated by automated systems, with manual traders accounting for a small fraction of total activity. Sniper bots continuously monitor for fresh liquidity deployments, arbitrage windows, and mispriced tokens, executing rapid buys to capture early momentum.

More than 70% of Solana sniper bots prioritize keeping RPC latency below 50ms for faster transaction propagation. Yet only about 10% achieve sustainable profitability (sometimes scaling to millions annually), and they get there by combining speed with a comprehensive strategy. The difference often comes down to infrastructure quality: dedicated RPC providers like RPC Fast have become essential for teams that need consistent sub-50ms performance at scale.

This technical blueprint outlines the complete infrastructure for a competitive sniper operation. Ranking among the best Solana sniper bots in 2026 takes more than low latency, so we'll cover event monitoring architecture, RPC configuration with code examples, risk parameters including slippage controls, and 2026 benchmark data.

We'll walk through detailed implementation steps, real-world performance metrics, and Jito MEV protection integration for building robust Solana trading bots.

Solana sniper bots are automated trading systems engineered to exploit the network's high throughput, focusing on newly launched tokens where valuations can multiply 10-100x within minutes.

Fundamentally, these systems function as automated market monitors: they track on-chain events—pool creation or liquidity additions—on decentralized exchanges like Raydium or Pump.fun, then place buy orders milliseconds before retail participants arrive.

The business case? Throughout 2025, Solana's DEX ecosystem processed $1.2 trillion in volume, 40% of it tied to new token launches, yet manual traders captured under 5% of early-stage opportunities, according to QuickNode performance data. Whether you're a degen hunting meme coins, an arbitrage specialist, or an institutional desk managing launch exposure, sniper automation creates systematic advantages. Without proper engineering, though, it destroys capital: 90% of amateur implementations fail because of latency issues or inadequate rug detection, as documented in developer retrospectives on Medium.

The operational flow is a time-critical pipeline: detect → analyze → execute → exit. Elite implementations complete the detect-to-execute path in under 150ms; slower bots end up buying at 3-5x floor prices during the rush. Missing a single slot (Solana's ~400ms block time) increases opportunity costs by 15-25%, based on Dune Analytics tracking across 10K+ launches.
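To see where a cycle overshoots its budget, it helps to timestamp each stage boundary and express the total in slots. The sketch below assumes you record three timestamps per cycle (the `StageTimestamps` shape is hypothetical) and uses the 150ms budget and ~400ms slot time quoted above; it is an illustration, not a profiler.

```typescript
// Timestamps (ms) recorded at each stage boundary of one detect → analyze → execute cycle.
// Field names are illustrative, not from any library.
interface StageTimestamps {
  detectedAt: number;  // event first seen by the monitor
  analyzedAt: number;  // scoring/filtering finished
  submittedAt: number; // transaction handed to the RPC or bundle endpoint
}

const SLOT_MS = 400;         // approximate Solana slot time
const CYCLE_BUDGET_MS = 150; // detect-to-execute target for elite implementations

interface CycleReport {
  analyzeMs: number;
  executeMs: number;
  totalMs: number;
  slotsElapsed: number; // total latency expressed in (fractional) slots
  overBudget: boolean;
}

function reportCycle(t: StageTimestamps): CycleReport {
  const analyzeMs = t.analyzedAt - t.detectedAt;
  const executeMs = t.submittedAt - t.analyzedAt;
  const totalMs = t.submittedAt - t.detectedAt;
  return {
    analyzeMs,
    executeMs,
    totalMs,
    slotsElapsed: totalMs / SLOT_MS,
    overBudget: totalMs > CYCLE_BUDGET_MS,
  };
}

// 40ms of analysis plus 90ms to build and submit: 130ms total, 0.325 slots, inside the budget.
const report = reportCycle({ detectedAt: 1_000, analyzedAt: 1_040, submittedAt: 1_130 });
```

Logging these reports per stage, rather than only the total, shows whether analysis or execution is the layer eating the budget.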

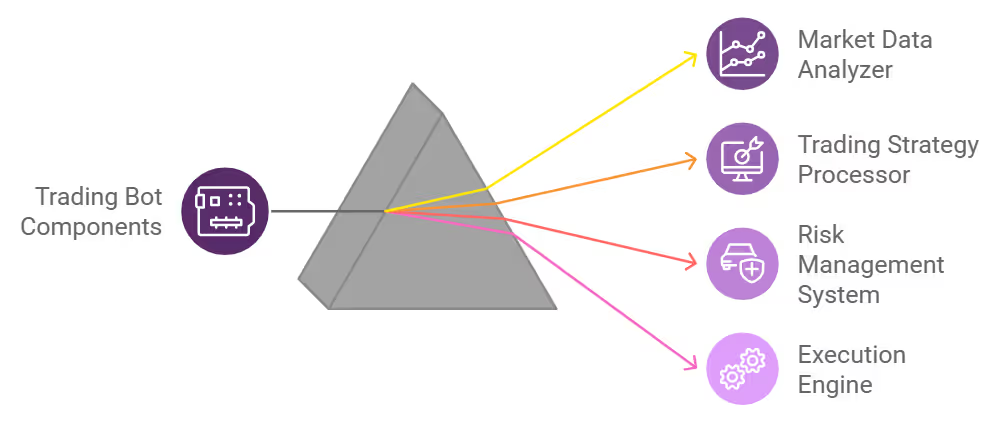

Each component builds upon the previous layer, creating an execution chain limited by its weakest element.

Here's what each module does, including recommended tools, configuration parameters, and their importance—informed by production 2026 deployments achieving minimal failure rates.

The event monitoring layer continuously scans blockchain state for trigger conditions. It uses WebSocket subscriptions or Geyser plugins to watch AMM program logs, such as pool initialization under Raydium's program ID or Pump.fun's bonding-curve events. Critical parameters: event filtering logic and polling intervals of 20-50ms. Geyser-based streams reduce detection latency, capturing more opportunities during volume spikes. Without this layer, you're operating blind.

The analysis layer evaluates detected opportunities and filters out low-quality launches (most launches fail quickly, per Birdeye data). It performs on-chain validation (liquidity above $10K, limited developer token holdings) and off-chain analysis (social sentiment via the Birdeye API), then combines the results into a composite score for ranking opportunities. This filtering eliminates most false positives and improves win rates.
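A minimal sketch of the composite scoring step, assuming the on-chain and off-chain checks have already been fetched into a plain object. Only the $10K liquidity floor comes from the text; the `LaunchSnapshot` fields, the 20% deployer cap, the weights, the $100K saturation point, and the 0.6 cut-off are illustrative assumptions to tune against your own backtests.

```typescript
// Hypothetical snapshot of a launch after on-chain and off-chain lookups.
interface LaunchSnapshot {
  liquidityUsd: number;  // pool liquidity in USD (on-chain)
  devHoldingPct: number; // % of supply held by the deployer, 0-100 (on-chain)
  socialScore: number;   // normalized 0-1 traction signal (off-chain API)
}

const MIN_LIQUIDITY_USD = 10_000; // hard floor from the filtering rules
const MAX_DEV_HOLDING_PCT = 20;   // assumed cap on deployer holdings
const MIN_SCORE = 0.6;            // assumed cut-off for the composite score

// Hard filters reject outright (null); survivors get a 0-1 composite score.
function scoreLaunch(s: LaunchSnapshot): number | null {
  if (s.liquidityUsd < MIN_LIQUIDITY_USD) return null;
  if (s.devHoldingPct > MAX_DEV_HOLDING_PCT) return null;
  // Normalize each signal to 0-1 (liquidity saturates at an assumed $100K).
  const liquidity = Math.min(s.liquidityUsd / 100_000, 1);
  const distribution = 1 - s.devHoldingPct / MAX_DEV_HOLDING_PCT;
  const social = Math.min(Math.max(s.socialScore, 0), 1);
  // Weighted sum; illustrative weights that add up to 1.
  return 0.4 * liquidity + 0.3 * distribution + 0.3 * social;
}

function shouldSnipe(s: LaunchSnapshot): boolean {
  const score = scoreLaunch(s);
  return score !== null && score >= MIN_SCORE;
}

// Ranks the candidates that pass, best first.
function rankLaunches(candidates: LaunchSnapshot[]): LaunchSnapshot[] {
  return candidates
    .map((s) => ({ s, score: scoreLaunch(s) }))
    .filter((x): x is { s: LaunchSnapshot; score: number } => x.score !== null && x.score >= MIN_SCORE)
    .sort((a, b) => b.score - a.score)
    .map((x) => x.s);
}
```

Separating hard filters from the weighted score keeps non-negotiable rules (liquidity floor, deployer concentration) from being outvoted by a strong social signal.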

The execution layer constructs and broadcasts swap transactions. It uses the Jupiter aggregator for optimal routing, with tight slippage (0.3-0.5%) and priority fees (compute-unit price) to get transactions prioritized, and submits them as Jito bundles for MEV protection. Simulation and retry mechanisms add reliability. The goal is fast execution that still protects entry prices.
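Two numbers in this layer are easy to get wrong: the minimum output implied by a slippage tolerance, and the priority fee implied by a compute budget. A minimal sketch of both, assuming amounts are integer base units (lamports or token atoms), slippage is in basis points (0.3% = 30 bps), and the compute-unit price is in micro-lamports per compute unit, which is how Solana prices priority fees. Plain `number` is exact only up to 2^53, so switch to `bigint` for large token amounts.

```typescript
// Minimum acceptable output for a quoted amount at a given slippage tolerance.
// 1 bp = 0.01%, so 30 bps = 0.3%.
function minAmountOut(quotedOut: number, slippageBps: number): number {
  if (slippageBps < 0 || slippageBps > 10_000 || slippageBps % 1 !== 0) {
    throw new RangeError("slippageBps must be an integer between 0 and 10000");
  }
  // Round down to a whole base unit.
  return Math.floor((quotedOut * (10_000 - slippageBps)) / 10_000);
}

// Priority fee in lamports: compute-unit limit × price (micro-lamports per CU) / 1,000,000, rounded up.
// It is charged on the requested limit, so an oversized limit costs extra.
function priorityFeeLamports(computeUnitLimit: number, microLamportsPerCu: number): number {
  return Math.ceil((computeUnitLimit * microLamportsPerCu) / 1_000_000);
}

// 1,000,000 token atoms quoted at 30 bps: at least 997,000 must arrive.
const floorOut = minAmountOut(1_000_000, 30);
// 200K CUs at 50,000 micro-lamports/CU: 10,000 lamports (0.00001 SOL) on top of the base fee.
const fee = priorityFeeLamports(200_000, 50_000);
```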

The position management layer monitors holdings and takes profit to lock in gains. Using DexScreener feeds, it sets sell targets at 2-3x and stop-losses at -15%, and it adds wallet rotation and time-based exits for security. This preserves more profit than passive holding.
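The exit rules above reduce to a small decision function evaluated on every price update. A sketch assuming prices arrive as plain numbers in the same quote currency as the entry price; the 2x target and -15% stop come from the text, while the 10-minute maximum hold for the time-based exit is an assumed value.

```typescript
type ExitAction = "hold" | "take_profit" | "stop_loss" | "time_exit";

interface OpenPosition {
  entryPrice: number; // price paid per token
  openedAtMs: number; // when the buy landed
}

interface ExitRules {
  takeProfitMultiple: number; // 2 → sell at 2x entry
  stopLossPct: number;        // 15 → sell at -15%
  maxHoldMs: number;          // assumed time-based exit
}

const DEFAULT_RULES: ExitRules = { takeProfitMultiple: 2, stopLossPct: 15, maxHoldMs: 10 * 60_000 };

function decideExit(pos: OpenPosition, price: number, nowMs: number, rules: ExitRules = DEFAULT_RULES): ExitAction {
  // Price-based exits are checked first so a sharp move is not masked by the timer.
  if (price >= pos.entryPrice * rules.takeProfitMultiple) return "take_profit";
  if (price <= pos.entryPrice * (1 - rules.stopLossPct / 100)) return "stop_loss";
  if (nowMs - pos.openedAtMs >= rules.maxHoldMs) return "time_exit";
  return "hold";
}
```

Keeping the rule pure (no network calls) makes it trivial to replay in a backtest with historical prices and timestamps.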

Critical consideration: relying on a single data source is a vulnerability, so diversify your feeds or accept 20% false-positive rates. Think of it as precision engineering: one miscalibration and the entire system fails. With this architecture in place, you're ready to build the actual stack.

Next section: Data ingestion infrastructure, the fuel powering the operation.

You understand the architectural components—now let's implement the data infrastructure that brings it to life.

This foundational layer handles real-time blockchain event capture and contextual data enrichment, enabling your sniper to make informed decisions rather than blind reactions. Without reliable ingestion, the entire system degrades: events get missed, decisions lag, and opportunities become losses.

The objective is a continuous information flow, processed quickly enough to keep the detection-to-execution cycle under 200 milliseconds.

Think of it as building a specialized blockchain news feed: establish the core event streams, then layer on filters and enrichment to remove noise.

Start with the fundamental subscriptions that capture on-chain triggers, such as new liquidity pools or token deployments. Use Solana's web3.js library for connection management and program log monitoring.

Here's a foundational TypeScript implementation for subscription and parsing:

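```typescript
import { Connection, PublicKey, type Logs } from '@solana/web3.js';

// Hypothetical helpers you implement yourself
declare function parseTokenMintFromLogs(logs: Logs): unknown; // custom extraction logic
declare function addEventToQueue(event: unknown): void;       // hand-off to the analysis module

// Connection requires an HTTP(S) URL; the WebSocket endpoint is passed separately
const connection = new Connection('https://your-endpoint-provider.com', {
  wsEndpoint: 'wss://your-endpoint-provider.com',
});

// Raydium AMM v4 program ID: subscribe to one program instead of all logs
const RAYDIUM_AMM_V4 = new PublicKey('675kPX9MHTjS2zt1qfr1NYHuzeLXfQM9H24wFSUt1Mp8');

connection.onLogs(
  RAYDIUM_AMM_V4,
  (logs) => {
    if (logs.err) return; // skip failed transactions
    // Raydium v4 pool creation emits an "initialize2" log line
    if (logs.logs.some((line) => line.includes('initialize2'))) {
      addEventToQueue(parseTokenMintFromLogs(logs));
    }
  },
  'confirmed',
);
```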

This watches for opportunities without overloading the system. Use "confirmed" commitment for stability, and filter by program and instruction type to cut irrelevant data.

For lower latency, migrate to Geyser gRPC endpoints from providers like Triton One. These stream raw updates before confirmation, which gives you a timing advantage. Limit concurrent streams to avoid resource exhaustion, and integrate ShredStream for pre-block visibility if your provider offers it.
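Running a Geyser stream alongside a WebSocket subscription (or diversifying feeds generally) means the same pool creation can arrive more than once. A minimal sketch of a bounded de-duplicating queue keyed on transaction signature, assuming each feed maps its raw updates into the hypothetical `PoolEvent` shape below; the 10,000-signature memory is an arbitrary default.

```typescript
// Hypothetical normalized event; each feed converts its raw updates into this shape.
interface PoolEvent {
  signature: string; // transaction signature, unique per on-chain event
  slot: number;
  source: "websocket" | "geyser";
}

class DedupQueue {
  private readonly seen = new Set<string>();
  private readonly order: string[] = []; // insertion order, used to forget the oldest signature
  private readonly queue: PoolEvent[] = [];
  private readonly maxRemembered: number;

  constructor(maxRemembered = 10_000) {
    this.maxRemembered = maxRemembered;
  }

  // Returns true if the event was new and enqueued, false if it was a duplicate.
  push(event: PoolEvent): boolean {
    if (this.seen.has(event.signature)) return false;
    this.seen.add(event.signature);
    this.order.push(event.signature);
    if (this.order.length > this.maxRemembered) {
      const oldest = this.order.shift();
      if (oldest !== undefined) this.seen.delete(oldest); // bound memory use
    }
    this.queue.push(event);
    return true;
  }

  // Hands the next event to the analysis module, oldest first.
  next(): PoolEvent | undefined {
    return this.queue.shift();
  }
}
```

Whichever feed delivers first wins, so the faster source naturally sets your detection latency.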

After detecting an event, enrich it with off-chain context. Query APIs like Birdeye for quick checks on social traction or holder distribution, and call them only when necessary to keep the pipeline fast. This turns raw logs into scored opportunities and helps the analysis module weed out obvious scams.
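One way to call enrichment APIs "only when necessary" is a small time-to-live cache in front of the lookup. In this sketch the fetcher you pass to `getOrFetch` is a hypothetical wrapper around Birdeye or a similar API, and the clock is injectable so the cache is easy to test; pick the TTL to match how quickly the signal goes stale.

```typescript
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const hit = this.entries.get(key);
    if (hit === undefined) return undefined;
    if (this.now() >= hit.expiresAt) {
      this.entries.delete(key); // stale: force a fresh lookup
      return undefined;
    }
    return hit.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Returns the cached value, or runs the (slow) fetcher and caches its result.
  async getOrFetch(key: string, fetcher: (key: string) => Promise<V>): Promise<V> {
    const cached = this.get(key);
    if (cached !== undefined) return cached;
    const value = await fetcher(key);
    this.set(key, value);
    return value;
  }
}
```

Two concurrent lookups for the same mint can both miss the cache and call the fetcher; if that matters, cache the in-flight promise instead of the resolved value.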

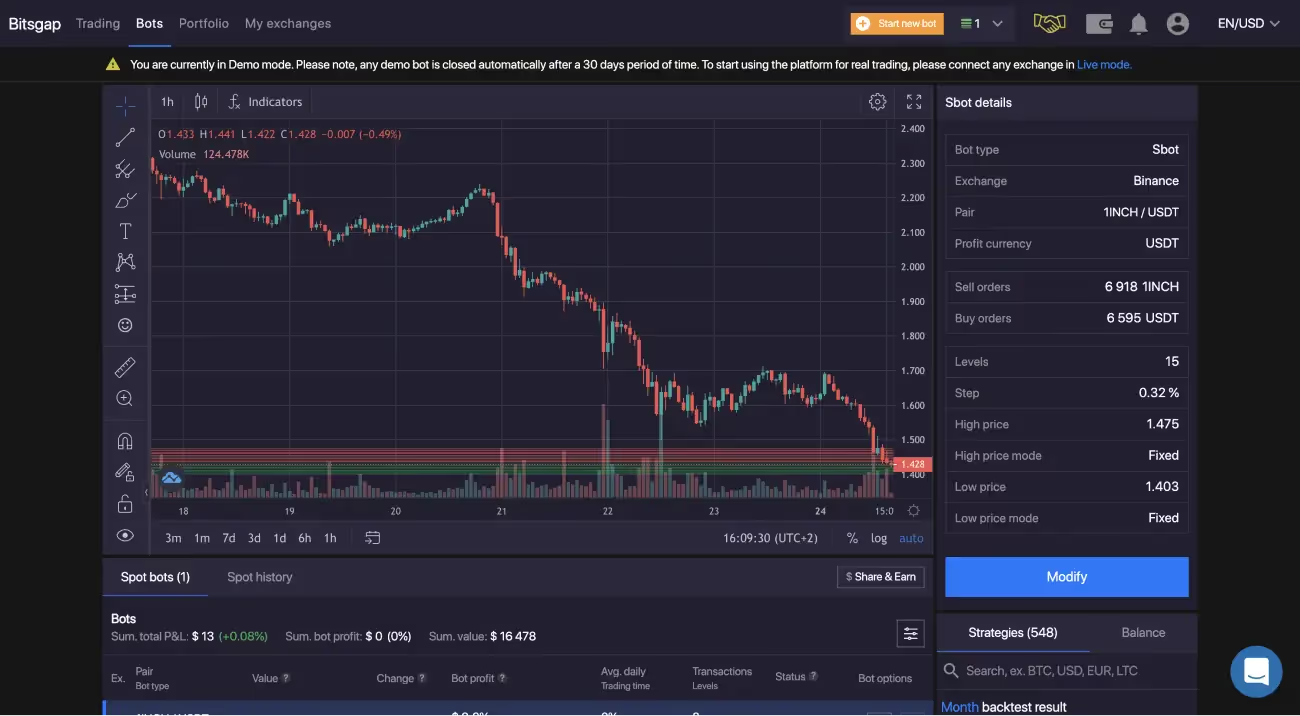

With data flowing, you need bulletproof network connectivity to act on it. The RPC layer is the bot's nervous system: it queries blockchain state, retrieves blockhashes, and broadcasts transactions. Public endpoints suffice for development but fail under production load, introducing delays that cost slots and competitive edge. Advanced Solana sniper bots rely on private RPCs from providers like Helius, or on self-hosted infrastructure, to remove this bottleneck, targeting consistent latency under 100ms with near-zero packet loss. This is where sniper bot technology separates winners from losers.

This configuration ties directly into your event monitoring: using the same provider for both keeps integration seamless, with parameters tuned for Solana-specific behavior such as leader scheduling. Prioritize geographic co-location (e.g., Frankfurt data centers) and features like dynamic priority fee estimation for transaction prioritization.
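Dynamic priority fee estimation typically means sampling recent fees and bidding at a chosen percentile. web3.js exposes `connection.getRecentPrioritizationFees()`, which returns `{ slot, prioritizationFee }` entries with fees in micro-lamports per compute unit. The sketch below covers only the percentile step on such samples; the 75th percentile and the floor and cap values are assumptions to tune.

```typescript
// Same shape as the entries returned by web3.js getRecentPrioritizationFees().
interface FeeSample {
  slot: number;
  prioritizationFee: number; // micro-lamports per compute unit
}

// Nearest-rank percentile of recent non-zero fees, clamped to [floor, cap] micro-lamports/CU.
function estimatePriorityFee(
  samples: FeeSample[],
  percentile = 75,
  floor = 1_000,
  cap = 2_000_000,
): number {
  // Many slots report 0; dropping them keeps quiet slots from dragging the estimate down.
  const fees = samples
    .map((s) => s.prioritizationFee)
    .filter((f) => f > 0)
    .sort((a, b) => a - b);
  if (fees.length === 0) return floor; // no paid fees observed: fall back to the floor
  const rank = Math.ceil((percentile / 100) * fees.length); // 1-based nearest rank
  const fee = fees[Math.min(Math.max(rank, 1), fees.length) - 1];
  return Math.min(Math.max(fee, floor), cap);
}
```

In a bot this would be called as `estimatePriorityFee(await connection.getRecentPrioritizationFees())`, with the result fed into a compute-unit price instruction. The cap protects you from overpaying when a single spike dominates the sample.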

Choose dedicated nodes over shared infrastructure, prioritizing ShredStream compatibility and Jito integration. Here's a TypeScript connection configuration for your bot:

```typescript
import { Connection } from '@solana/web3.js';

const rpcUrl = 'https://your-dedicated-rpc.solana-mainnet.quiknode.pro/';

const connection = new Connection(rpcUrl, {
  commitment: 'processed', // lower latency than 'confirmed' for sniping
  wsEndpoint: 'wss://your-websocket-endpoint.com', // pair with a WebSocket endpoint for events
});

// Latency test: time a lightweight call such as getSlot() (target <50ms)
async function measureRpcLatencyMs(): Promise<number> {
  const start = performance.now();
  await connection.getSlot();
  return performance.now() - start;
}
```

This keeps query patterns lean. Use 'processed' commitment for speed, but pre-simulate transactions to catch failures.

Estimate priority fees automatically from your RPC (for example, with getRecentPrioritizationFees or your provider's fee-estimate endpoint) and apply them through Compute Budget instructions, setting a compute-unit limit of around 200K for complex swaps. Handle network congestion with retry logic: exponential backoff, three attempts at most. For MEV mitigation, integrate Jito's client early and submit your transaction as a bundle with a validator tip.
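The retry policy (exponential backoff, three attempts at most) can be sketched generically. Here `send` stands in for whatever submits your transaction, for example a call to `connection.sendRawTransaction`; the 100ms base delay is an assumption, and `sleep` is injectable so tests don't actually wait.

```typescript
// Delay before the retry that follows failed attempt number `attempt` (1-based): base, 2×base, 4×base...
function backoffDelayMs(attempt: number, baseMs = 100): number {
  return baseMs * 2 ** (attempt - 1);
}

const defaultSleep = (ms: number): Promise<void> => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs `send` up to maxAttempts times, waiting with exponential backoff between failures.
async function withRetry<T>(
  send: () => Promise<T>,
  maxAttempts = 3,
  sleep: (ms: number) => Promise<void> = defaultSleep,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await send();
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts) await sleep(backoffDelayMs(attempt));
    }
  }
  throw lastError; // all attempts failed: surface the last error
}
```

Re-sending the same signed transaction is safe because Solana deduplicates by signature, but once its blockhash expires (about 150 blocks) you must rebuild and re-sign it with a fresh blockhash.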

With a robust data pipeline and optimized RPC connectivity in place, the critical step is building and submitting trades that actually land. This layer handles transaction assembly, pre-flight simulation, and network broadcast, so your bot captures opportunities instead of merely identifying them. Solana has no public mempool: transactions are forwarded to upcoming leaders and compete for inclusion there, and raw speed without intelligent construction leads to 30% higher failure rates during congestion (Jito 2026 operational logs). Target under 100ms from construction to broadcast, using routing aggregators and transaction bundlers for efficient execution and MEV protection.

Integrate this directly with your RPC connection: fetch a current blockhash and simulate before transmitting. Providers with native Jito integration streamline this process and prioritize bundles for next-slot inclusion.

Use Jupiter V6 for cross-DEX routing, requesting quotes for a SOL amount against the target token mint. Set a tight slippage tolerance (0.3%, i.e., 30 basis points) to avoid overpaying, and allocate around 200K compute units for smooth execution.
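For reference, a Jupiter V6 quote request is an HTTP GET with `inputMint`, `outputMint`, `amount` (in base units), and `slippageBps` query parameters. The sketch below only builds the URL. The host shown is the V6 quote endpoint as it has commonly been documented; Jupiter has changed API hosts over time, so confirm the current one in their docs before relying on it.

```typescript
const JUPITER_V6_QUOTE = "https://quote-api.jup.ag/v6/quote"; // verify against current Jupiter docs
const WSOL_MINT = "So11111111111111111111111111111111111111112"; // wrapped SOL mint

// Builds a quote URL for spending `lamports` of SOL on `outputMint`.
function buildQuoteUrl(outputMint: string, lamports: number, slippageBps = 30): string {
  const params = new URLSearchParams({
    inputMint: WSOL_MINT,
    outputMint,
    amount: String(lamports),
    slippageBps: String(slippageBps), // 30 bps = 0.3%
  });
  return `${JUPITER_V6_QUOTE}?${params.toString()}`;
}

// Usage inside an async function (0.1 SOL = 100,000,000 lamports):
// const quote = await (await fetch(buildQuoteUrl(targetMint, 100_000_000))).json();
```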

Here's a Rust sketch of transaction construction and signing (the Jupiter client is treated as a hypothetical wrapper crate):

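```rust
use solana_client::nonblocking::rpc_client::RpcClient;
use solana_sdk::{
    pubkey::Pubkey,
    signature::{Keypair, Signer},
    transaction::Transaction,
};
use jupiter_swap_api::Jupiter; // hypothetical aggregator wrapper; adapt to the client you use

async fn construct_swap(
    jupiter: &Jupiter,
    rpc: &RpcClient,
    payer: &Keypair,
    input_mint: Pubkey,
    output_mint: Pubkey,
    amount: u64,
) -> Result<Transaction, Box<dyn std::error::Error>> {
    // Slippage in basis points: 30 bps = 0.3%
    let quote = jupiter.get_quote(input_mint, output_mint, amount, 30).await?;
    // Assumed to return a single solana_sdk Instruction for the routed swap
    let swap_instruction = jupiter.swap_instruction(&quote).await?;
    let recent_blockhash = rpc.get_latest_blockhash().await?;
    // Build and sign in one step; the payer covers fees
    let tx = Transaction::new_signed_with_payer(
        &[swap_instruction],
        Some(&payer.pubkey()),
        &[payer],
        recent_blockhash,
    );
    Ok(tx)
}
```

This keeps the operation atomic. Always pre-simulate via RPC to catch failures such as insufficient liquidity. In practice, Jupiter routes often rely on address lookup tables and therefore versioned transactions, so treat the legacy Transaction here as a simplification.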

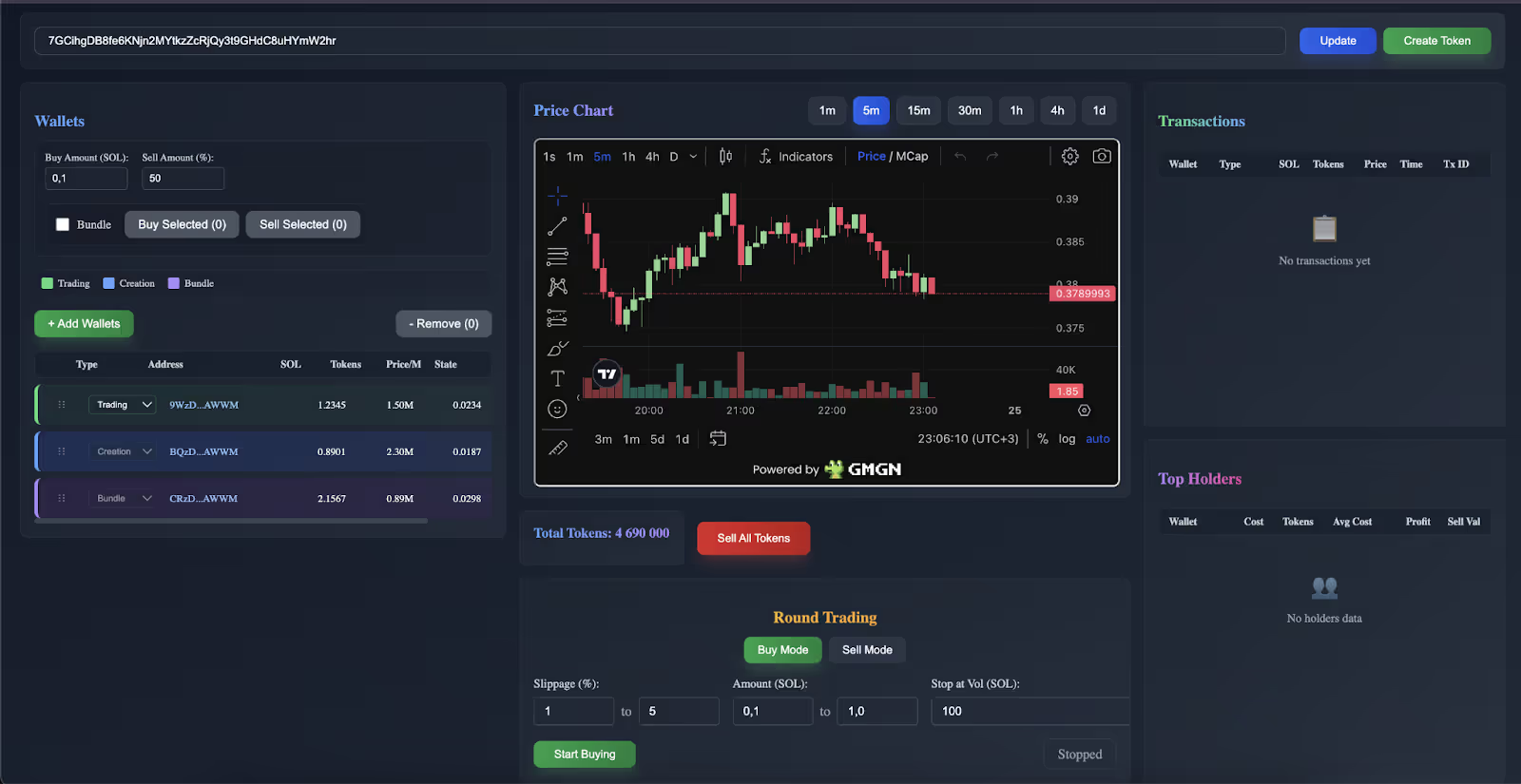

With trades executing, the next step is the wallet infrastructure and risk rules that protect the operation. This layer manages funds, enforces position limits, and monitors threats like rug pulls or sandwich attacks so that a single failure can't destroy your capital. Without these controls, even perfect execution bleeds capital: unprotected positions cause 50% of sniper failures annually. Rotate across 5-10 keypairs, size positions at 1-2% of capital, and monitor continuously.
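The sizing rule above becomes a simple guard in front of every buy. A minimal sketch, assuming balances are tracked in lamports; the 2% per-trade cap comes from the text, while the per-wallet exposure cap and the 0.01 SOL fee reserve are hypothetical knobs.

```typescript
const LAMPORTS_PER_SOL = 1_000_000_000;

interface SizingRules {
  maxTradePct: number;  // share of total capital per position, e.g. 2
  maxWalletPct: number; // share of total capital any single hot wallet may hold (assumed)
}

// Largest buy allowed for this trade, in lamports.
function maxPositionLamports(
  totalCapital: number,
  walletBalance: number,
  rules: SizingRules,
  reserveLamports = 10_000_000, // keep 0.01 SOL for fees and rent (assumed)
): number {
  const byCapital = Math.floor((totalCapital * rules.maxTradePct) / 100);
  const spendable = Math.max(walletBalance - reserveLamports, 0);
  return Math.min(byCapital, spendable); // never spend more than the wallet can afford
}

// True if topping a wallet up to `newBalance` would breach the per-wallet exposure cap.
function exceedsWalletCap(totalCapital: number, newBalance: number, rules: SizingRules): boolean {
  return newBalance > (totalCapital * rules.maxWalletPct) / 100;
}

const rules: SizingRules = { maxTradePct: 2, maxWalletPct: 10 };
const capital = 100 * LAMPORTS_PER_SOL; // 100 SOL bankroll
```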

Connect this layer to the execution layer by injecting keypairs dynamically and querying balances after each transaction. Tools like Solflare APIs or hardware wallet integrations add security without degrading performance.

Split funds between hot wallets (software-based) and cold storage (hardware wallets for major holdings). Load keys from environment variables; never hardcode credentials. After each execution, rotate wallets to avoid DEX pattern detection.

TypeScript example for balance monitoring:

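```typescript
import { Connection, LAMPORTS_PER_SOL, PublicKey } from '@solana/web3.js';

const MIN_BALANCE_LAMPORTS = 0.1 * LAMPORTS_PER_SOL; // e.g., 0.1 SOL

// Hypothetical helper: cycles to the next keypair in your wallet pool
declare function switchToNextWallet(): void;

async function monitorBalanceAndRotate(
  connection: Connection,
  walletPubkey: PublicKey,
): Promise<number> {
  const balance = await connection.getBalance(walletPubkey); // in lamports
  if (balance < MIN_BALANCE_LAMPORTS) {
    switchToNextWallet(); // cycle to a different keypair from the pool
  }
  return balance;
}
```

This keeps each wallet liquid enough to trade; target under 1% capital exposure per keypair.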
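`switchToNextWallet` is left as a hypothetical helper in the balance check. One way to implement it is a round-robin pool that skips wallets below the balance threshold. This sketch is hypothetical: it keeps last-known balances in memory (you would refresh them from `getBalance`) and uses plain address strings instead of `Keypair` objects so it stays library-free.

```typescript
interface WalletSlot {
  address: string;         // base58 public key
  balanceLamports: number; // last known balance, refreshed after each trade
}

class WalletPool {
  private index = 0;
  private readonly wallets: WalletSlot[];
  private readonly minBalance: number;

  constructor(wallets: WalletSlot[], minBalanceLamports = 100_000_000) { // 0.1 SOL
    if (wallets.length === 0) throw new Error("wallet pool is empty");
    this.wallets = wallets;
    this.minBalance = minBalanceLamports;
  }

  current(): WalletSlot {
    return this.wallets[this.index];
  }

  // Advances round-robin to the next wallet at or above the threshold; throws if none qualifies.
  switchToNextWallet(): WalletSlot {
    for (let step = 1; step <= this.wallets.length; step++) {
      const candidate = (this.index + step) % this.wallets.length;
      if (this.wallets[candidate].balanceLamports >= this.minBalance) {
        this.index = candidate;
        return this.wallets[candidate];
      }
    }
    throw new Error("no wallet above the minimum balance");
  }
}
```

Round-robin spreads activity evenly across keypairs, which is the point of rotation; a randomized order would make the pattern harder to fingerprint at the cost of less predictable balances.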

With the infrastructure complete, refine it through systematic testing and optimization for Solana's production environment. This phase turns a functional prototype into a consistent performer by surfacing edge cases, such as volume spikes or false signals, that eliminate 70% of amateur bots (developer forum analysis). Focus on historical backtesting, key performance indicators, and live simulation to benchmark against the competition. The result is an adaptive system that moves ROI from breakeven to sustainable profitability.

Start conservatively: Replay historical events offline, then stress-test in simulation mode. Resources like Solana's blockchain explorer archives or Dune queries provide datasets from previous quarters, enabling iteration without capital risk.

Extract event logs from sources like Solana Explorer (target 5K-10K launches over a quarter) and process through your pipeline. Simulate complete cycles—detection through exit—using historical timestamps to replicate production timing.

Python backtesting framework example:

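```python
import json
from typing import Any, Protocol


class Pipeline(Protocol):
    # Your bot's detection -> analysis -> execution chain (hypothetical interface)
    def process_event(self, event: dict, timestamp: float) -> dict: ...


def compute_metrics(results: list[dict]) -> dict[str, Any]:
    # Assumes each outcome dict carries a numeric "pnl" field
    wins = sum(1 for r in results if r["pnl"] > 0)
    return {
        "trades": len(results),
        "success_rate": wins / len(results) if results else 0.0,
        "total_pnl": sum(r["pnl"] for r in results),
    }


def backtest_event_pipeline(events_file: str, bot_pipeline: Pipeline) -> dict[str, Any]:
    with open(events_file, "r") as f:
        events = json.load(f)  # historical event logs
    results = []
    for event in events:
        timestamp = event["slot_time"]
        # Replay with the historical timestamp: detection -> analysis -> execution
        outcome = bot_pipeline.process_event(event, timestamp)
        results.append(outcome)  # record success/failure and P&L
    return compute_metrics(results)
```

Adjust filtering rules or fee parameters based on replay results; for example, tighten social validation thresholds if rug pulls increase. Run 50-100 iterations per dataset to smooth out statistical noise.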

Monitor these metrics to assess system health—establish baselines aligned with your risk parameters.

Success Rate: Percentage of processed events resulting in profitable trades (target >60% after filtering). Log by component to identify bottlenecks.

Return per Batch: Net return across 50-100 trades after fees (aim for a 10-20% average). Segment by market conditions.

Latency Distribution: Histograms of cycle timings (e.g., detection <50ms, complete cycle <200ms). Visualize with tools like Grafana.

| Metric | Target | Measurement Method |
|---|---|---|
| Success Rate | >60% | Profitable Trades / Total Events |
| Return/Batch | 10–20% | (Gains − Fees) / Capital |
| Avg Latency | <200ms | Timestamp differential analysis |
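The three metrics in the table can be computed from a per-trade log. A minimal sketch, assuming each record carries P&L and fees in SOL and end-to-end latency in milliseconds (the `TradeRecord` shape is hypothetical). It also reports p95 latency alongside the average, since tail latency is what costs slots.

```typescript
interface TradeRecord {
  pnlSol: number;    // gross profit or loss on the trade
  feesSol: number;   // priority fees, tips, and base fees paid
  latencyMs: number; // detection → submission time
}

interface BatchMetrics {
  successRate: number; // profitable trades / total events, 0-1
  returnPct: number;   // (gains − fees) / capital × 100
  avgLatencyMs: number;
  p95LatencyMs: number;
}

// Nearest-rank percentile over an ascending-sorted array.
function percentile(sorted: number[], p: number): number {
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.min(Math.max(rank, 1), sorted.length) - 1];
}

function computeMetrics(trades: TradeRecord[], totalEvents: number, capitalSol: number): BatchMetrics {
  if (trades.length === 0 || totalEvents === 0 || capitalSol <= 0) {
    throw new Error("need at least one trade, one event, and positive capital");
  }
  const profitable = trades.filter((t) => t.pnlSol - t.feesSol > 0).length;
  const net = trades.reduce((sum, t) => sum + t.pnlSol - t.feesSol, 0);
  const latencies = trades.map((t) => t.latencyMs).sort((a, b) => a - b);
  return {
    successRate: profitable / totalEvents,
    returnPct: (net / capitalSol) * 100,
    avgLatencyMs: latencies.reduce((a, b) => a + b, 0) / latencies.length,
    p95LatencyMs: percentile(latencies, 95),
  };
}
```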

Bringing it all together: Your production-ready bot

You now have the complete technical blueprint: a modular infrastructure that transforms basic speed into a comprehensive, resilient system for Solana sniping. It's engineered to handle the network's dynamics—whether quiet launches or high-volume frenzies.

Start incrementally: Focus on a single DEX for monitoring, validate your data feeds with limited events, and scale as confidence grows. Optimize based on telemetry—what's your actual end-to-end latency? Which filters eliminate the most noise? This iterative methodology keeps operations lean while you understand the system's behavior.

Looking ahead through 2026, competitive sniper operations will need ShredStream integrated with Jito bundles on geographically co-located nodes, delivering sub-40ms performance without operational complexity.

The foundation of any competitive sniper operation is the RPC layer.

RPC Fast provides dedicated Solana nodes with the latency guarantees and priority fee passthrough that separate winning bots from expensive experiments—visit them to configure your infrastructure for whatever opportunities 2026 presents.