Most seed+ teams treat Web3 infrastructure as a utility bill—something you pay for, plug in, and ignore until it breaks. This approach works for a prototype. It fails for a business.

When a project moves from "building" to "scaling," the infrastructure requirements shift from simple connectivity to complex systems engineering. If you are managing a DeFi protocol, an HFT desk, or a wallet, your infrastructure is no longer a support function. It is your product’s performance ceiling.

Before picking a chain or a provider, most teams miss three critical operational realities that eventually drain runway and engineering focus.

The hidden ops tax

"Just using an RPC" is a temporary state. As your traffic grows, you don't just need more requests; you need observability, rate-limit management, and failover logic. Without a plan, you eventually dedicate two senior engineers just to keeping the data flowing. This is "ops debt," and it is more expensive than your cloud bill.

The retry storm and amplification

In Web3, when a system slows down, clients don't wait—they retry. A 10% increase in latency can trigger a 500% increase in request volume as bots and wallets hammer your endpoints. If your infrastructure isn't designed for burst isolation, a minor upstream hiccup becomes a total system collapse.

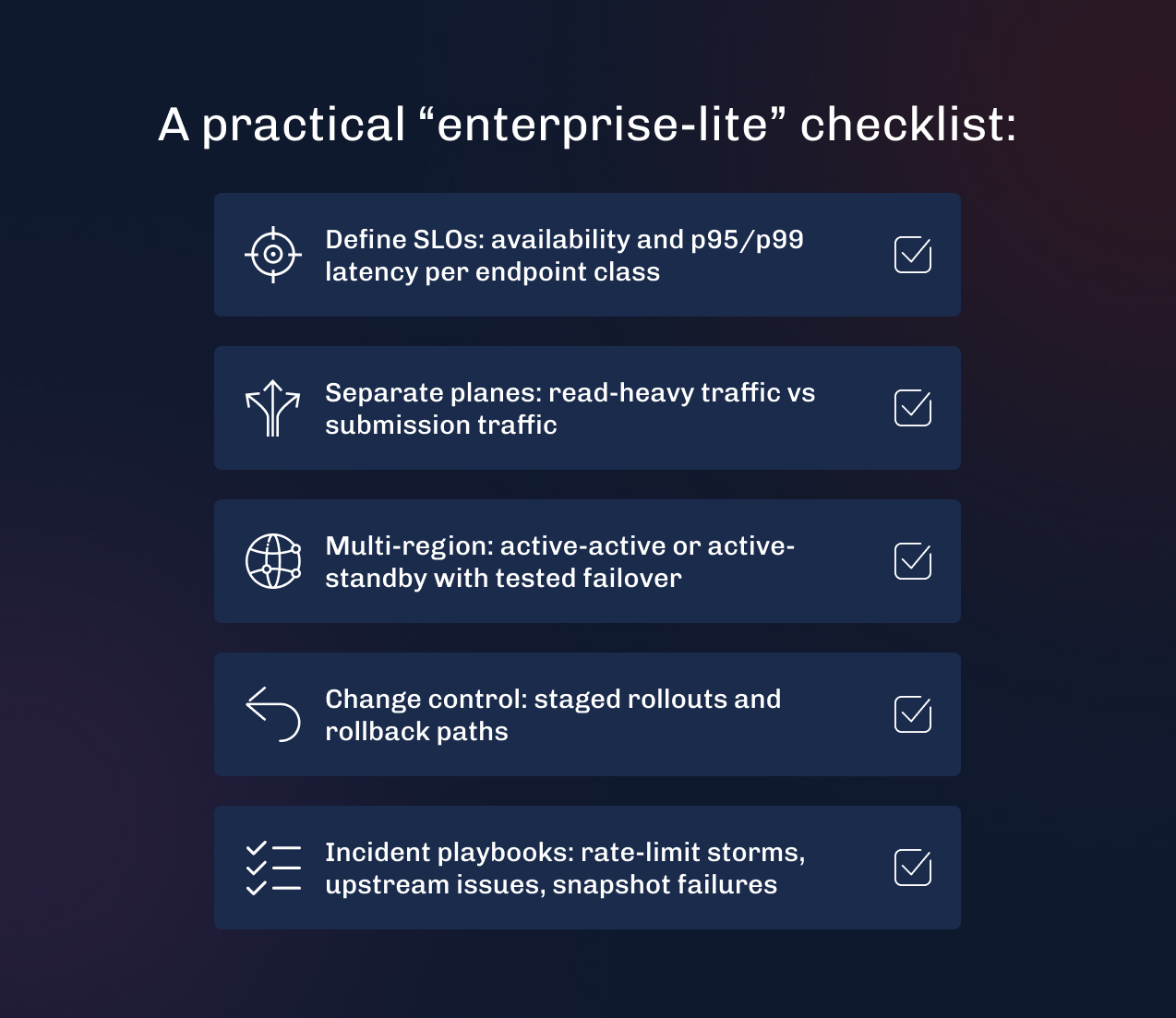

The "Enterprise-lite" transition

There is a specific moment when a startup becomes an enterprise. It isn't about headcount; it's about the cost of downtime. When a 15-minute RPC brownout costs $50k in lost trading volume or user trust, you have entered the enterprise phase.

At this point, you need multi-region high availability (HA), avoid public endpoints, use incident playbooks, and maintain predictable cost models.

Public endpoints optimize for broad access, not your workload. When you scale, you typically see one of these:

If your business depends on execution quality or user swaps, you want infrastructure shaped around your traffic, not the median developer’s traffic.

To see these principles in action, we look at Solana. We use Solana as our primary example because its high-throughput, low-latency nature forces you to solve the hardest infrastructure problems immediately. If you can build stable, enterprise-grade infrastructure here, you can build it anywhere.

On Solana, the diversity of workloads—from HFT searchers to consumer wallets—requires a specialized approach to the "data plane."

On Solana, a single RPC URL is a bottleneck. High-performance teams split their infrastructure according to the nature of the work:

If you poll, you pay twice:

For trading and real-time DEX backend workloads, streaming reduces both tail latency and infrastructure waste. In the Solana ecosystem, gRPC-style streaming is widely used for real-time consumption. Your provider’s docs should spell out the supported streaming interfaces and operational constraints.

RPC Fast addresses these needs through auto-scalable, geo-distributed global infrastructure and dedicated nodes, as detailed in the documentation. Also, check up both Jito Shredstream gRPC and Yellowstone gRPC in its Solana section.

Infrastructure choices directly impact execution quality. In the Solana ecosystem, block-building dynamics and private orderflow mean that where your node sits—and how it talks to the network—determines your PnL.

If your infrastructure is "noisy," your transactions land late. High-load teams often move toward self-hosted clusters or dedicated environments to isolate their traffic. For example, the Kolibrio case study highlights a design using Kubernetes and 10 Gb switches to achieve transaction simulations in under 1ms.

Use this table to identify where your infrastructure currently sits and where it needs to go as you scale.

| Workload | Critical metric | The seed approach | The enterprise upgrade |

|---|---|---|---|

| Trading / MEV | p99 Latency | Shared RPC | Dedicated nodes + gRPC streams |

| DEX / DeFi | Data Freshness | Public endpoints | Slot-aware caching + HA clusters |

| Wallets | Global Uptime | Single provider | Multi-region + provider redundancy |

| Analytics | Sync Speed | Standard nodes | Archive nodes + ETL pipelines |

| Validator Ops | Security / Uptime | Manual setup | Sentry patterns + K8s automation |

Infrastructure isn't about "better" or "faster" in the abstract. It is about measurable outcomes. When evaluating your stack or a potential partner, look for hard data on cost and performance. Dysnix has documented several transitions from "unstable" to "enterprise-grade" with clear metrics:

Achieved a 70% reduction in infrastructure costs while stabilizing a DEX handling 158 billion requests per month.

Nansen

Reduced archive node update times from 3 hours to 15 minutes, ensuring their analytics platform stayed ahead of market moves.

For seed+ teams, the goal is to build infrastructure that stays out of the way of the product roadmap. This requires moving away from "black box" RPC providers and toward a transparent, workload-aware architecture.