Kubernetes has become the backbone of modern infrastructure, but running it manually is still a war zone of YAML chaos, networking bugs, and CrashLoopBackOff nightmares. Managed Kubernetes providers exist to take away that pain—automating the control plane, upgrades, monitoring, and scaling so teams can focus on shipping real apps instead of debugging kube-proxy at 2 a.m.

But not every Kubernetes company gets it right. Some platforms are intuitive and built for developers. Others are bloated with enterprise checklists but fail the “can I deploy before my coffee cools?” test.

This guide cuts through the noise. We’ll compare the top managed Kubernetes platforms in 2026, show you who they’re best for, and help you choose the right enterprise Kubernetes platform for your team.

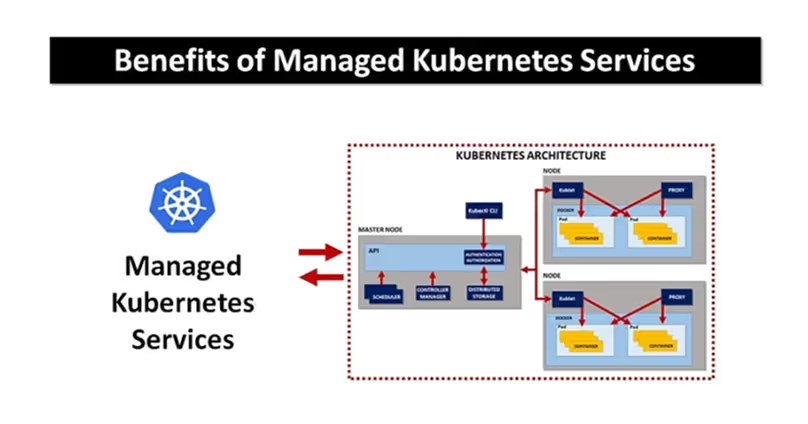

Managed Kubernetes providers (also known as Kubernetes platforms or managed Kubernetes services) are companies or cloud platforms that take responsibility for operating your cluster infrastructure, so you don’t have to.

Instead of wrestling with master nodes, patching control planes, or wiring up storage and networking yourself, they provide a turnkey Kubernetes experience.

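At a minimum, a managed Kubernetes provider typically runs the control plane for you and handles upgrades, monitoring, scaling, networking, and baseline security.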

Thanks to these integrated capabilities, managed services now account for roughly 63% of Kubernetes deployments.

The managed Kubernetes service market is experiencing rapid growth. In 2024, it was valued at approximately $2.11 billion, and analysts expect it to reach $14.61 billion by 2033, with a 24% CAGR.
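As a quick sanity check, the three market figures above can be tested against each other. The sketch below computes the growth rate implied by $2.11B in 2024 and $14.61B in 2033, which spans 9 years. The function names are illustrative and are not taken from any analyst report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Rate r such that start_value * (1 + r) ** years == end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Market-size figures in billions of USD; 2024 -> 2033 is 9 compounding years.
rate = implied_cagr(2.11, 14.61, 2033 - 2024)
print(f"implied CAGR: {rate:.1%}")
print(f"$2.11B grown at 24% for 9 years: ${project(2.11, 0.24, 9):.2f}B")
```

The implied rate comes out at about 24%, so the quoted start value, end value, and CAGR are consistent with one another.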

Choosing a managed Kubernetes provider means trading control for convenience, but done right, it reduces your total cost of ownership (TCO)—some providers claim up to 40% lower TCO by offloading patching, scaling, and security.

In short: a managed Kubernetes provider is a Kubernetes company or platform that runs the infrastructure for you—control plane, nodes, networking, security—so your team can build and deliver, not fight Kubernetes plumbing.

Managed Kubernetes makes sense for teams that want to run real workloads without constantly managing clusters, and the payoff looks different for each kind of team.

A startup racing to deliver features doesn’t have weeks to tune kube-proxy or debug CrashLoopBackOff pods. Managed providers handle cluster provisioning, autoscaling groups, and control plane upgrades in the background. For SaaS teams with spiky workloads, this means a smoother user experience during peak hours, without the need for manual node scaling at midnight.
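Here is what "autoscaling" means at the node level. When a traffic spike leaves pods stuck in Pending, a node autoscaler decides how many nodes to add. The sketch below is a simplified stand-in that uses first-fit-decreasing bin packing on CPU requests only. The real Cluster Autoscaler instead simulates the scheduler and also considers memory, affinity, and taints. The function name and the numbers are hypothetical.

```python
from typing import List


def nodes_needed(pod_cpu_requests_m: List[int], node_allocatable_m: int) -> int:
    """Estimate how many nodes the pending pods need (first-fit decreasing).

    Simplified model: only CPU requests in millicores are considered.
    """
    free_per_node: List[int] = []  # remaining millicores on each node opened so far
    for request in sorted(pod_cpu_requests_m, reverse=True):
        if request > node_allocatable_m:
            raise ValueError(f"a pod requesting {request}m cannot fit on any node")
        for i, free in enumerate(free_per_node):
            if free >= request:
                free_per_node[i] = free - request  # pack onto an existing node
                break
        else:
            free_per_node.append(node_allocatable_m - request)  # open a new node
    return len(free_per_node)


# Spike: 12 small pods (500m) and 4 large ones (1500m), nodes with 3800m allocatable.
print(nodes_needed([500] * 12 + [1500] * 4, node_allocatable_m=3800))
```

Packing the largest requests first keeps the estimate close to the theoretical minimum. Here that minimum is 12000m / 3800m, rounded up to 4 nodes.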

Enterprises run sensitive workloads—fintech apps under PCI DSS, healthcare apps under HIPAA, or workloads bound by GDPR. A managed service provides baseline security hardening: encrypted etcd storage, pod-level network isolation with Cilium or Calico, IAM and RBAC integration, and compliance-ready logging pipelines. Instead of reinventing security controls, enterprises inherit a foundation that satisfies both engineering and audit teams.
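RBAC is one part of that inherited baseline. Kubernetes RBAC rules only grant permissions and have no deny rules, so anything not explicitly granted is refused. The sketch below models that evaluation in plain Python. It is deliberately simplified: it ignores resourceNames, subresources, non-resource URLs, and aggregated roles. The class and function names, and the "auditor" role, are illustrative and are not a Kubernetes client API.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class PolicyRule:
    api_groups: Tuple[str, ...]  # "" is the core group; "*" matches any group
    resources: Tuple[str, ...]   # e.g. "pods", "secrets"; "*" matches any
    verbs: Tuple[str, ...]       # e.g. "get", "list"; "*" matches any


def _matches(values: Tuple[str, ...], wanted: str) -> bool:
    return "*" in values or wanted in values


def is_allowed(rules: Iterable[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    """Additive RBAC: allowed if ANY bound rule matches group, resource, and verb."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


# Rules a hypothetical read-only "auditor" Role might carry.
auditor = [
    PolicyRule(api_groups=("",), resources=("pods", "services"), verbs=("get", "list", "watch")),
    PolicyRule(api_groups=("apps",), resources=("deployments",), verbs=("get", "list")),
]
print(is_allowed(auditor, "", "pods", "list"))                # granted by the first rule
print(is_allowed(auditor, "", "secrets", "get"))              # never granted, so refused
print(is_allowed(auditor, "apps", "deployments", "delete"))   # verb not granted, so refused
```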

Cluster ops is noisy: patching worker nodes, monitoring API server latency, handling kernel CVEs. For a team with just one or two SREs, this burns capacity fast. Managed Kubernetes automates node lifecycle management, surfaces metrics through built-in monitoring, and patches control planes within SLA windows. Engineers redirect their time to improving uptime and tuning app performance rather than babysitting kubelets.
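Automated node lifecycle management usually means a rolling upgrade. The platform cordons, drains, and replaces a few nodes at a time so capacity never drops too far. The sketch below only plans the batches. The function name and the `max_unavailable` parameter are illustrative. They are similar in spirit to the max-unavailable settings some managed node pools expose, but this is not any provider's API.

```python
from typing import Iterator, List


def upgrade_batches(nodes: List[str], max_unavailable: int) -> Iterator[List[str]]:
    """Yield groups of nodes to cordon, drain, and replace together.

    At most `max_unavailable` nodes are out of service at once, so the
    remaining nodes keep serving traffic during the patch window.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    for start in range(0, len(nodes), max_unavailable):
        yield nodes[start:start + max_unavailable]


pool = [f"node-{i}" for i in range(5)]  # hypothetical node names
for batch in upgrade_batches(pool, max_unavailable=2):
    # A real platform would also honor PodDisruptionBudgets while draining.
    print("cordon + drain + replace:", batch)
```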

Running clusters across AWS, GCP, and Azure means different IAM models, different ingress controllers, and different upgrade cadences. Managed providers reduce this friction with unified APIs, consistent Kubernetes versions, and integrations for fleet management. With GitOps (Argo CD, Flux), teams push the same manifests across providers while relying on the platform to handle version drift and rollout safety.
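Even when GitOps syncs identical manifests everywhere, clusters on different providers drift apart in Kubernetes version. A small fleet check can flag the clusters that have fallen too far behind. In the sketch below, the cluster names and version strings are hypothetical. The one-minor-version threshold is an assumed team policy, not a Kubernetes rule.

```python
from typing import Dict, List, Tuple


def parse_minor(version: str) -> Tuple[int, int]:
    """'v1.29.10-eks-7f9249a' or '1.31.4-gke.1000' -> (major, minor)."""
    core = version.lstrip("v").split("-")[0]
    major, minor = core.split(".")[:2]
    return int(major), int(minor)


def lagging_clusters(fleet: Dict[str, str], max_minor_lag: int = 1) -> List[str]:
    """Names of clusters trailing the newest version in the fleet by more than max_minor_lag minors."""
    versions = {name: parse_minor(v) for name, v in fleet.items()}
    newest_major, newest_minor = max(versions.values())
    lagging = []
    for name, (major, minor) in sorted(versions.items()):
        if major < newest_major or newest_minor - minor > max_minor_lag:
            lagging.append(name)
    return lagging


fleet = {  # hypothetical clusters and server versions
    "gke-prod": "1.31.4-gke.1000",
    "eks-prod": "v1.29.10-eks-7f9249a",
    "aks-prod": "1.30.6",
}
print(lagging_clusters(fleet))
```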

Infrastructure as Code (IaC) is now standard, and managed Kubernetes fits right in. Most platforms ship with Terraform and Pulumi modules, CI/CD integrations for GitHub Actions or GitLab CI, and autoscaling hooks for KEDA or Vertical Pod Autoscaler. Instead of building glue code, teams apply their GitOps pipelines directly and scale clusters as easily as scaling app deployments.
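On the autoscaling side, KEDA handles scale-to-zero itself but delegates 1-to-N scaling to a Horizontal Pod Autoscaler that it manages. That HPA computes replicas with a documented rule: `ceil(currentReplicas * currentMetric / targetMetric)`. It skips scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that core rule. The function name and the min/max defaults are illustrative. The real controller adds stabilization windows and handling for unready pods.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # CPU at 150% of target
print(desired_replicas(4, current_metric=63, target_metric=60))   # within tolerance
print(desired_replicas(4, current_metric=300, target_metric=60))  # capped by max_replicas
```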

Some providers focus on developer speed and simplicity, while others deliver enterprise-grade scale, compliance, and automation. Below, we break down the top 10 Kubernetes platforms you should know about this year.

Dysnix positions itself as a managed Kubernetes company for seed+ and high-growth businesses that need fast deployment, cost savings, and scalability without trading off reliability. Instead of offering a “one-size-fits-all” cluster, Dysnix builds bespoke Kubernetes architectures designed around each project’s workload, compliance needs, and growth path.

Key strengths:

Dysnix delivers managed Kubernetes as a structured journey. It begins with discovery, where engineers assess current infrastructure and applications to identify bottlenecks and requirements. Based on this, they design a custom Kubernetes architecture aligned with the client’s business and technical goals. Deployment follows, with networking, security policies, and monitoring baked in. If needed, legacy workloads are migrated seamlessly into the new cluster.

The engagement doesn’t stop there: Dysnix continues with optimization to improve performance and cost efficiency, then maintains and monitors clusters to ensure they stay reliable over time.

As Daniel Yavorovych, Co-Founder & CTO at Dysnix, puts it: “When clients hire us, they don’t just get one DevOps engineer. They get the strength of our whole team, proven methods, and the confidence that their Kubernetes environment will scale reliably and cost-efficiently.”

Google Kubernetes Engine is one of the most mature and widely adopted managed Kubernetes platforms. It handles the entire control plane, including the API server and etcd, with automatic upgrades and high availability built in. This gives teams confidence that clusters remain secure and stable without constant manual intervention.

GKE operates in two main modes. In Standard, engineering teams manage node pools, machine types, and scaling policies. In Autopilot, Google takes full responsibility for nodes, and customers pay for the CPU, memory, and storage their pods request rather than for whole nodes. This flexibility allows companies to balance control and convenience depending on their needs.

The platform integrates deeply with Google Cloud services, offering built-in observability through Cloud Monitoring and Logging, identity management with IAM, and seamless networking via VPC. Fleet management tools allow organizations to run multi-region or multi-cluster environments with consistent policies.

On the pricing side, GKE charges a flat management fee per cluster. Beyond that, Standard mode bills for the nodes you provision, while Autopilot bills per pod. This makes Autopilot attractive for teams that want predictable operations without over-provisioning nodes.
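The billing difference between the two modes can be seen with back-of-the-envelope arithmetic. Standard pays for provisioned node capacity whether pods use it or not. Autopilot pays for what pods request. Every rate in the sketch below is a placeholder, not Google's pricing. The flat per-cluster fee is left out to isolate the node-versus-pod difference. Which mode is cheaper in practice depends on how tightly you would pack nodes yourself.

```python
from dataclasses import dataclass
from typing import List

HOURS = 730  # rough hours per month


@dataclass
class PodRequest:
    vcpu: float
    mem_gib: float


def standard_cost(node_count: int, node_hourly: float, hours: float = HOURS) -> float:
    """Standard-style billing: every provisioned node is paid for, busy or idle."""
    return node_count * node_hourly * hours


def autopilot_cost(pods: List[PodRequest], vcpu_hourly: float, gib_hourly: float,
                   hours: float = HOURS) -> float:
    """Autopilot-style billing: pay for the CPU and memory each pod requests."""
    return sum(p.vcpu * vcpu_hourly + p.mem_gib * gib_hourly for p in pods) * hours


pods = [PodRequest(vcpu=0.5, mem_gib=1.0) for _ in range(6)]  # 3 vCPU requested in total
print(f"Standard, 3 nodes:  ${standard_cost(3, node_hourly=0.20):.2f}/month")
print(f"Autopilot, 6 pods:  ${autopilot_cost(pods, vcpu_hourly=0.05, gib_hourly=0.005):.2f}/month")
```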

Strengths:

Limitations:

Amazon EKS provides a fully managed Kubernetes control plane with native integration into the AWS ecosystem. The service manages cluster availability, upgrades, and security patches, allowing DevOps teams to focus on workloads instead of control plane operations.

EKS offers multiple compute options. Clusters can run on self-managed EC2 instances, on managed node groups with automated lifecycle handling, or on Fargate, where pods run serverlessly without any nodes to manage. This range of options gives teams flexibility to optimize costs and performance.

One of EKS’s strongest features is its identity and access model. With IAM Roles for Service Accounts (IRSA), Kubernetes service accounts can assume fine-grained AWS IAM roles, tightly controlling access to S3, DynamoDB, or any other AWS service. This is critical for workloads with strict security and compliance requirements.
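The "fine-grained" part of IRSA lives in the IAM role's trust policy. That policy lets the cluster's OIDC provider issue web-identity credentials only to one specific namespace and service account. The sketch below builds that trust policy as a Python dict, following the structure AWS documents for IRSA. The account ID, OIDC issuer URL, namespace, and service account name are placeholders. Check the EKS documentation for your setup before using it.

```python
import json


def irsa_trust_policy(account_id: str, oidc_issuer: str, namespace: str,
                      service_account: str) -> dict:
    """Trust policy letting exactly one Kubernetes service account assume the role."""
    provider = oidc_issuer.removeprefix("https://")  # requires Python 3.9+
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"},
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    # Only this namespace/service-account pair may assume the role.
                    f"{provider}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                    f"{provider}:aud": "sts.amazonaws.com",
                }
            },
        }],
    }


policy = irsa_trust_policy(
    account_id="111122223333",  # placeholder account
    oidc_issuer="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE",
    namespace="payments",       # hypothetical namespace
    service_account="s3-reader",  # hypothetical service account
)
print(json.dumps(policy, indent=2))
```

The permissions themselves (for example, read-only access to one S3 bucket) go in a separate policy attached to the role. The Kubernetes ServiceAccount is then linked to the role through the `eks.amazonaws.com/role-arn` annotation.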

EKS ties neatly into other AWS services: Elastic Load Balancers for ingress, EBS and EFS for storage, CloudWatch for logging and metrics, and WAF or Shield for enhanced security. For teams already invested in AWS, this integration makes EKS a natural fit.

From a cost perspective, EKS charges a flat fee for each control plane plus the underlying compute, storage, and networking resources. While this provides predictable billing, poorly optimized clusters can drive up costs quickly.
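One common way costs creep up is paying for node capacity that no pod has requested. The sketch below estimates a monthly bill from a flat control plane fee plus nodes, then shows how much of the node spend is idle. All rates are placeholders, not AWS list prices. CPU requests stand in for the fuller picture, which also includes memory, storage, and data transfer.

```python
HOURS_PER_MONTH = 730  # common monthly billing approximation


def monthly_bill(control_plane_hourly: float, node_hourly: float, node_count: int,
                 hours: float = HOURS_PER_MONTH) -> float:
    """Flat control plane fee plus on-demand node cost for one month."""
    return (control_plane_hourly + node_hourly * node_count) * hours


def idle_node_spend(node_bill: float, allocatable_vcpu: float, requested_vcpu: float) -> float:
    """Portion of the node bill paying for CPU that no pod has requested."""
    if allocatable_vcpu <= 0:
        raise ValueError("allocatable_vcpu must be positive")
    idle_fraction = max(0.0, 1.0 - requested_vcpu / allocatable_vcpu)
    return idle_fraction * node_bill


# Hypothetical cluster: 10 nodes with 4 allocatable vCPU each, pods requesting 14 vCPU in total.
total = monthly_bill(control_plane_hourly=0.10, node_hourly=0.20, node_count=10)
node_bill = 0.20 * 10 * HOURS_PER_MONTH
print(f"monthly bill: ${total:.2f}")
print(f"idle node spend: ${idle_node_spend(node_bill, allocatable_vcpu=40, requested_vcpu=14):.2f}")
```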

Strengths:

Limitations:

Azure Kubernetes Service is Microsoft’s managed Kubernetes platform, designed with strong integration into the broader Azure ecosystem. It automates the control plane and node lifecycle, handling upgrades, patches, and monitoring so teams can focus on workloads.

AKS stands out for identity and governance. Native integration with Microsoft Entra ID (formerly Azure Active Directory) makes role-based access straightforward, and Azure Policy enables organizations to enforce compliance rules across clusters. Built-in monitoring through Azure Monitor and Application Insights provides visibility without extra configuration.
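Azure Policy applies rules like these when workloads are admitted to the cluster; its AKS add-on builds on OPA Gatekeeper. The sketch below shows the kind of check involved by evaluating two common guardrails, no privileged containers and required CPU/memory limits, against a pod manifest parsed into a dict. It is a hypothetical illustration in plain Python, not Azure Policy's rule language or API.

```python
from typing import Any, Dict, List


def violations(pod: Dict[str, Any]) -> List[str]:
    """Return human-readable guardrail violations for a pod manifest."""
    problems: List[str] = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        # Guardrail 1: privileged containers get near-host-level access.
        if container.get("securityContext", {}).get("privileged", False):
            problems.append(f"{name}: privileged containers are not allowed")
        # Guardrail 2: every container must declare CPU and memory limits.
        limits = container.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                problems.append(f"{name}: missing {resource} limit")
    return problems


pod = {
    "spec": {
        "containers": [
            {
                "name": "web",
                "securityContext": {"privileged": True},
                "resources": {"limits": {"cpu": "500m"}},
            }
        ]
    }
}
for problem in violations(pod):
    print(problem)
```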

For developers, AKS offers predictable scaling and streamlined deployment with Terraform modules and GitHub Actions integration. Enterprises value its security-first approach, with private clusters, managed identities, and integration with Microsoft Defender for Cloud.

Strengths:

Limitations:

DigitalOcean Kubernetes, or DOKS, brings Kubernetes to startups and small teams that want simplicity without sacrificing scalability. The service abstracts away most cluster complexity, delivering a clean developer experience that feels closer to Heroku but with Kubernetes power under the hood.

DOKS integrates smoothly with DigitalOcean’s ecosystem: block storage, managed databases, and load balancers. Clusters come with monitoring and logging options, and teams can plug in their own tools like Prometheus or Grafana. With clear Terraform support and a straightforward UI, it’s accessible even for developers new to Kubernetes.

The pricing model is one of its strongest points: no extra charges for control plane management, with costs tied directly to the underlying compute and storage resources. This transparency makes it attractive for early-stage companies that need predictable bills.

Strengths:

Limitations:

Civo is a managed Kubernetes provider built on the lightweight K3s distribution. Its biggest draw is speed: clusters can be ready in under two minutes, making it one of the fastest platforms for developers who value quick iteration. The lightweight footprint doesn’t compromise on features—Civo still provides autoscaling, load balancing, and persistent storage.

Developers benefit from a CLI-first approach, clean Terraform modules, and ready-to-use GitOps integrations. Out of the box, clusters include Prometheus and Traefik, so monitoring and ingress are available from day one. Civo is especially popular with startups and small teams that want production-ready Kubernetes without complex setup.

Strengths:

Limitations:

Linode Kubernetes Engine focuses on simplicity and predictable pricing. Designed for indie developers, SMBs, and budget-conscious teams, LKE removes control plane fees entirely—you only pay for the compute and storage resources you use. This makes it attractive for projects where cost transparency is essential.

LKE provides Terraform integration and full API access, so infrastructure can still be managed with automation tools. Monitoring and observability are more of a bring-your-own approach, but integration with Prometheus and Grafana is straightforward. For scaling workloads, node pools and autoscaling are supported, while networking and load balancing remain simple to configure.

The platform doesn’t aim to compete with AWS or GCP on enterprise depth, but for many teams, that’s an advantage: fewer layers of complexity and faster setup.

Strengths:

Limitations:

Vultr’s managed Kubernetes service is designed for developers who want control without unnecessary overhead. Clusters are provisioned quickly with a clean API and strong Terraform support. Unlike some larger providers, Vultr avoids hidden complexity—the experience is closer to raw Kubernetes, but with the control plane, upgrades, and availability handled for you.

Developers can bring their own monitoring stack, like Prometheus and Grafana, or integrate external observability tools. The platform offers straightforward load balancing, block storage, and node pools, but leaves more advanced configurations up to the user. This makes Vultr attractive for technical teams that already know Kubernetes and prefer flexibility over guardrails.

Pricing is transparent, with no additional fees for the control plane. Users only pay for the compute and storage resources they deploy, which makes it appealing for cost-sensitive teams or projects that need full visibility into spend.

Strengths:

Limitations:

Scaleway Kapsule is a managed Kubernetes platform built in Europe, with a focus on developer speed, automation, and data sovereignty. Clusters come with built-in support for Prometheus, logs, and monitoring, so teams have observability from day one. Load balancers, persistent volumes, and object storage are fully integrated, making it easy to deploy real workloads without extra configuration.

One of Scaleway’s defining features is its alignment with EU privacy and regulatory requirements. For teams operating under GDPR or looking for alternatives to US hyperscalers, Kapsule provides an attractive option with data centers in France and across Europe.

Automation is another strength. Terraform integration is built in, and Kapsule works seamlessly with GitOps tools like Argo CD. This makes it a good fit for teams that rely heavily on Infrastructure as Code and want to standardize deployment pipelines across environments.
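GitOps tools such as Argo CD and Flux share the same core loop: read the desired state from Git, compare it with what is running, and apply the difference. The sketch below models only the planning step, with manifests as plain dicts keyed by (kind, namespace, name). The real tools diff normalized fields and ignore server-set ones, so treat this as a simplified model. The `prune` flag mirrors the idea behind both tools' prune option, which deletes resources that were removed from Git. The names and sample data are hypothetical.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str, str]       # (kind, namespace, name)
Manifest = Dict[str, object]


def plan_sync(desired: Dict[Key, Manifest], live: Dict[Key, Manifest],
              prune: bool = True) -> Dict[str, List[Key]]:
    """List the create/update/delete actions a sync would take."""
    create = sorted(k for k in desired if k not in live)
    update = sorted(k for k in desired if k in live and desired[k] != live[k])
    delete = sorted(k for k in live if k not in desired) if prune else []
    return {"create": create, "update": update, "delete": delete}


desired = {  # what Git says should exist
    ("Deployment", "shop", "web"): {"replicas": 3, "image": "web:1.4"},
    ("Service", "shop", "web"): {"port": 80},
}
live = {  # what is currently running
    ("Deployment", "shop", "web"): {"replicas": 2, "image": "web:1.4"},
    ("ConfigMap", "shop", "old-flags"): {"beta": "on"},
}
print(plan_sync(desired, live))
```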

Pricing is competitive, with clear billing models that avoid hidden costs. Developers can start small and scale clusters predictably, which has made Kapsule popular among startups and mid-sized European companies.

Strengths:

Limitations:

Rancher is a Kubernetes management platform built for teams that need to operate clusters at scale across hybrid or multi-cloud environments. Unlike fully managed cloud offerings, Rancher is available in both hosted and self-hosted options, giving organizations freedom to run Kubernetes in the way that best fits their infrastructure strategy, and it can also be operated for you by a managed Kubernetes firm.

One of Rancher’s biggest strengths is centralized cluster management. It allows teams to provision, upgrade, and monitor fleets of Kubernetes clusters from one central management interface. This is particularly valuable for enterprises with dozens of clusters spread across AWS, Azure, GCP, and on-premises data centers. Rancher also supports edge deployments, extending Kubernetes beyond traditional cloud environments.

The platform integrates with Cluster API, Terraform, and popular GitOps tools, making it automation-friendly. It also ships with full observability (logging, monitoring, and alerting) plus strong security features such as role-based access control and Pod Security Admission settings that can be applied consistently across all managed clusters.
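PodSecurityPolicy was removed in Kubernetes 1.25. Since then, pod security has been enforced through Pod Security Admission, which is configured per namespace with labels such as `pod-security.kubernetes.io/enforce` set to `privileged`, `baseline`, or `restricted`. Auditing that label across every cluster is the kind of consistency check a multi-cluster manager makes practical. The sketch below works on namespace labels already collected into a dict; fetching them is out of scope. It treats a missing label as `privileged`, which is the behavior when no cluster-wide default is configured. The cluster and namespace names are hypothetical.

```python
from typing import Dict, List

ENFORCE_LABEL = "pod-security.kubernetes.io/enforce"
LEVELS = ["privileged", "baseline", "restricted"]  # least to most strict


def weak_namespaces(clusters: Dict[str, Dict[str, Dict[str, str]]],
                    minimum: str = "baseline") -> List[str]:
    """'cluster/namespace' entries whose enforced level is below `minimum`.

    `clusters` maps cluster name -> namespace name -> namespace labels.
    """
    floor = LEVELS.index(minimum)
    weak: List[str] = []
    for cluster, namespaces in sorted(clusters.items()):
        for namespace, labels in sorted(namespaces.items()):
            level = labels.get(ENFORCE_LABEL, "privileged")  # unlabeled = privileged
            if LEVELS.index(level) < floor:
                weak.append(f"{cluster}/{namespace}")
    return weak


fleet = {
    "edge-01": {"payments": {ENFORCE_LABEL: "restricted"}, "legacy": {}},
    "aws-prod": {"web": {ENFORCE_LABEL: "baseline"}, "tools": {ENFORCE_LABEL: "privileged"}},
}
print(weak_namespaces(fleet))
```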

From a developer perspective, Rancher provides a consistent interface to manage workloads regardless of where the cluster is running. For operations teams, it reduces the complexity of maintaining upgrades and compliance policies across distributed environments.

Strengths:

Limitations:

The top platforms each serve different needs: hyperscalers like GKE, EKS, and AKS excel at deep ecosystem integration, while developer-focused services like DOKS and LKE make Kubernetes approachable.

But for companies that need more than an out-of-the-box platform, a partner like Dysnix bridges the gap.

Dysnix delivers managed Kubernetes as a tailored service: optimized for high-load systems, backed by Kubernetes FinOps expertise, and hardened with advanced security practices.

Instead of just providing a cluster, Dysnix designs, deploys, and maintains infrastructure that scales with your business.

If you want Kubernetes to accelerate your growth instead of slowing it down, book a call with Dysnix and see how our engineers can build the right platform for your next stage.