We were engaged to take an aging, open‑source blockchain ETL pipeline and operationalize a Bitcoin‑only dataset for an internal Google customer. The work was primarily operational and systems‑engineering: reuse the existing Blockchain‑ETL components, package them for reliable operation on GCP, harden streaming and daily reconciliation setup so the dataset remains both fresh and consistent, and hand over a reproducible, supportable deployment.

Google reached out for a maintained dataset to replace an abandoned open‑source Bitcoin ETL dataset and provide a new dataset with long‑term operational support. This dataset is the final result of another open source project—Blockchain ETL.

Dysnix had prior involvement with Blockchain ETL components at the very beginning of the project. So we’ve known it from A to Z.

Why not a total rewrite? The original ETL provided the required features and semantics. The shortest path to reliable production was to adapt and operationalize, not rewrite — less risk, faster time‑to‑value.

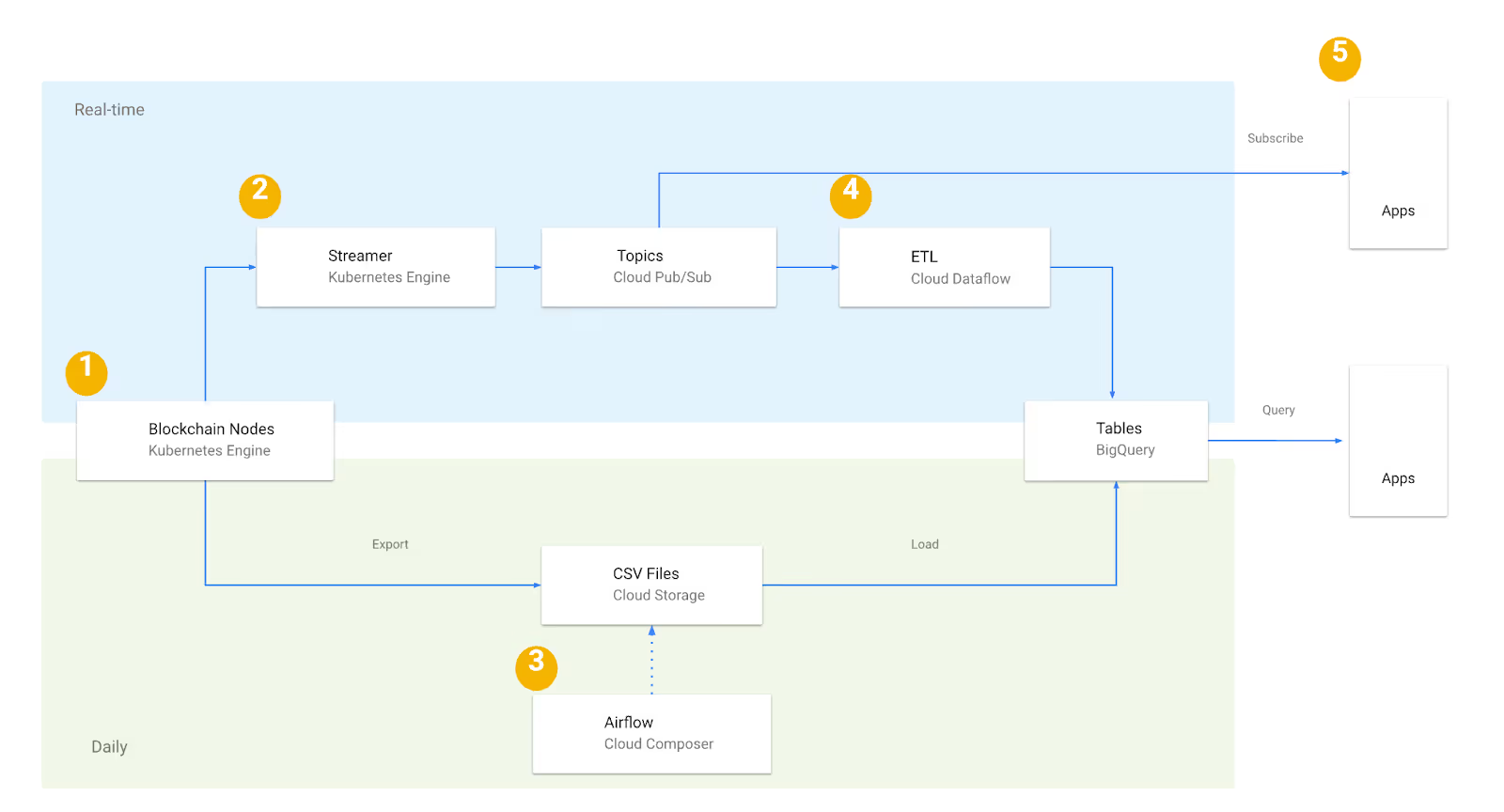

Here’s how the whole solution works:

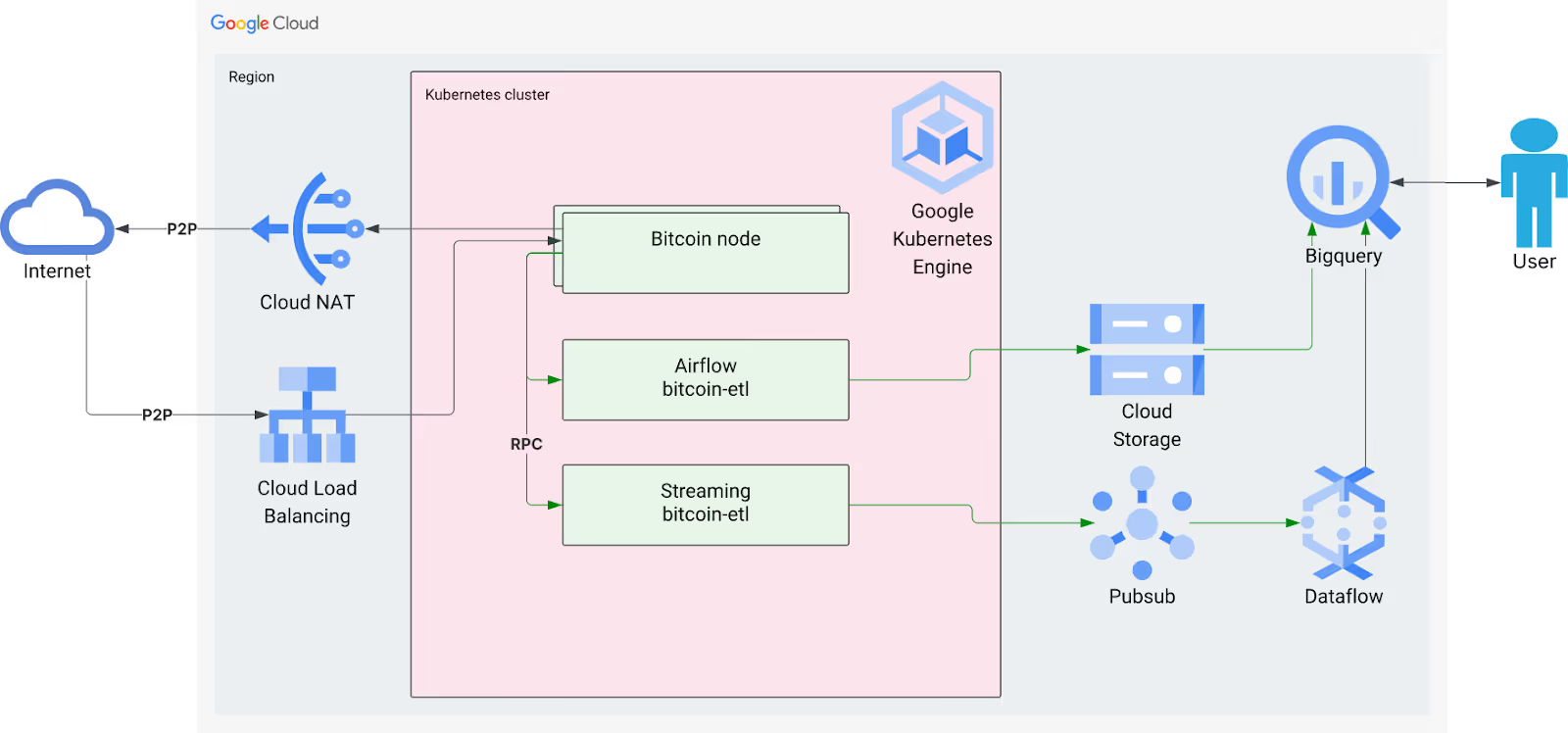

The other architecture layers overview:

| Layer | Technologies | Responsibility |

|---|---|---|

| Compute & Orchestration | GKE (Kubernetes), Helm, Flux CD | Run nodes, ETL, Airflow workers; GitOps deploys |

| Storage & Analytics | GCS, BigQuery | Artifacts, final dataset |

| Streaming | Pub/Sub, Dataflow | Timely updates |

| Orchestration & ETL | Airflow, Blockchain-ETL | Daily backfill + incremental parsing |

| Infra as Code | Terraform, Terragrunt | Provisioning GCP resources, bootstrap Flux CD |

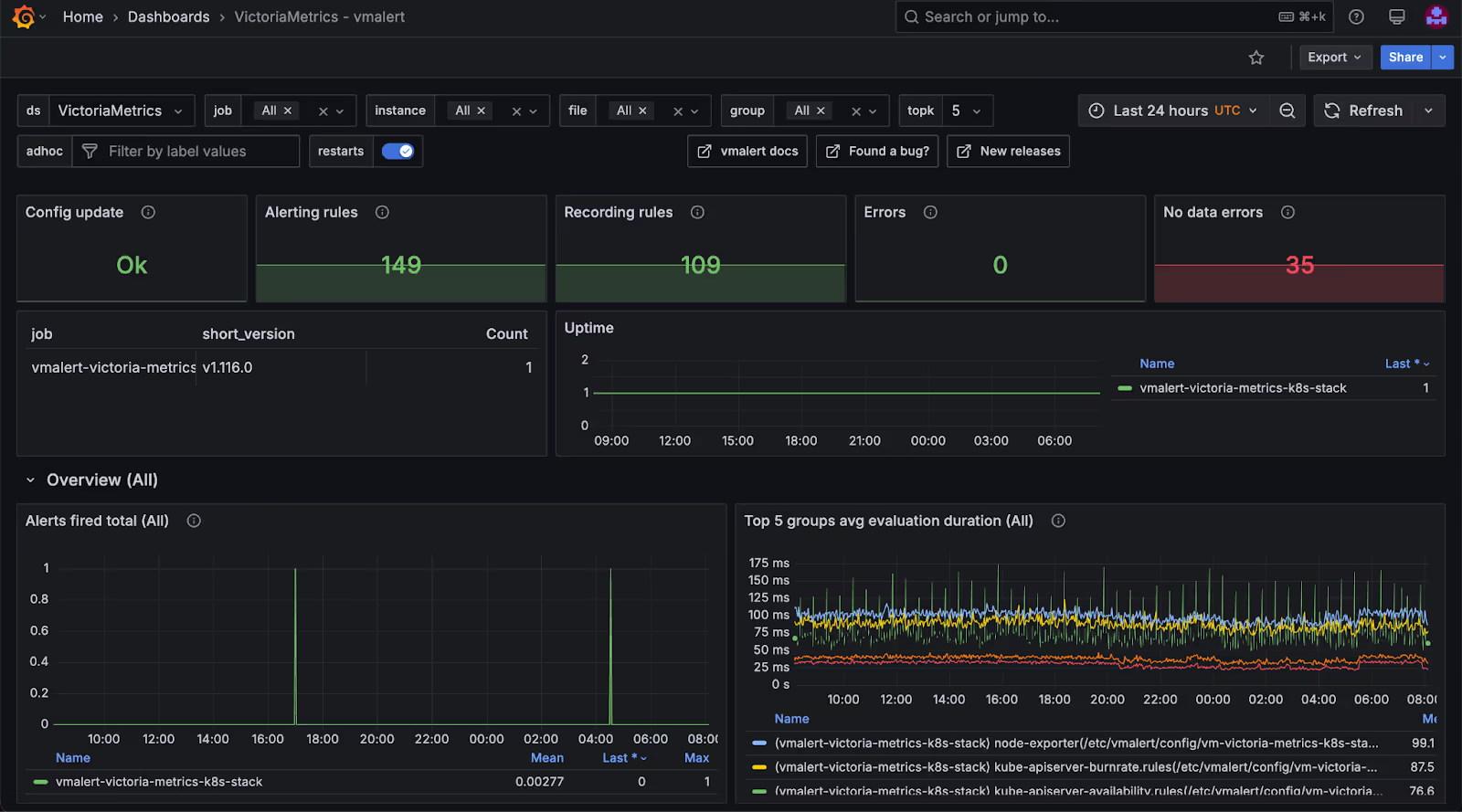

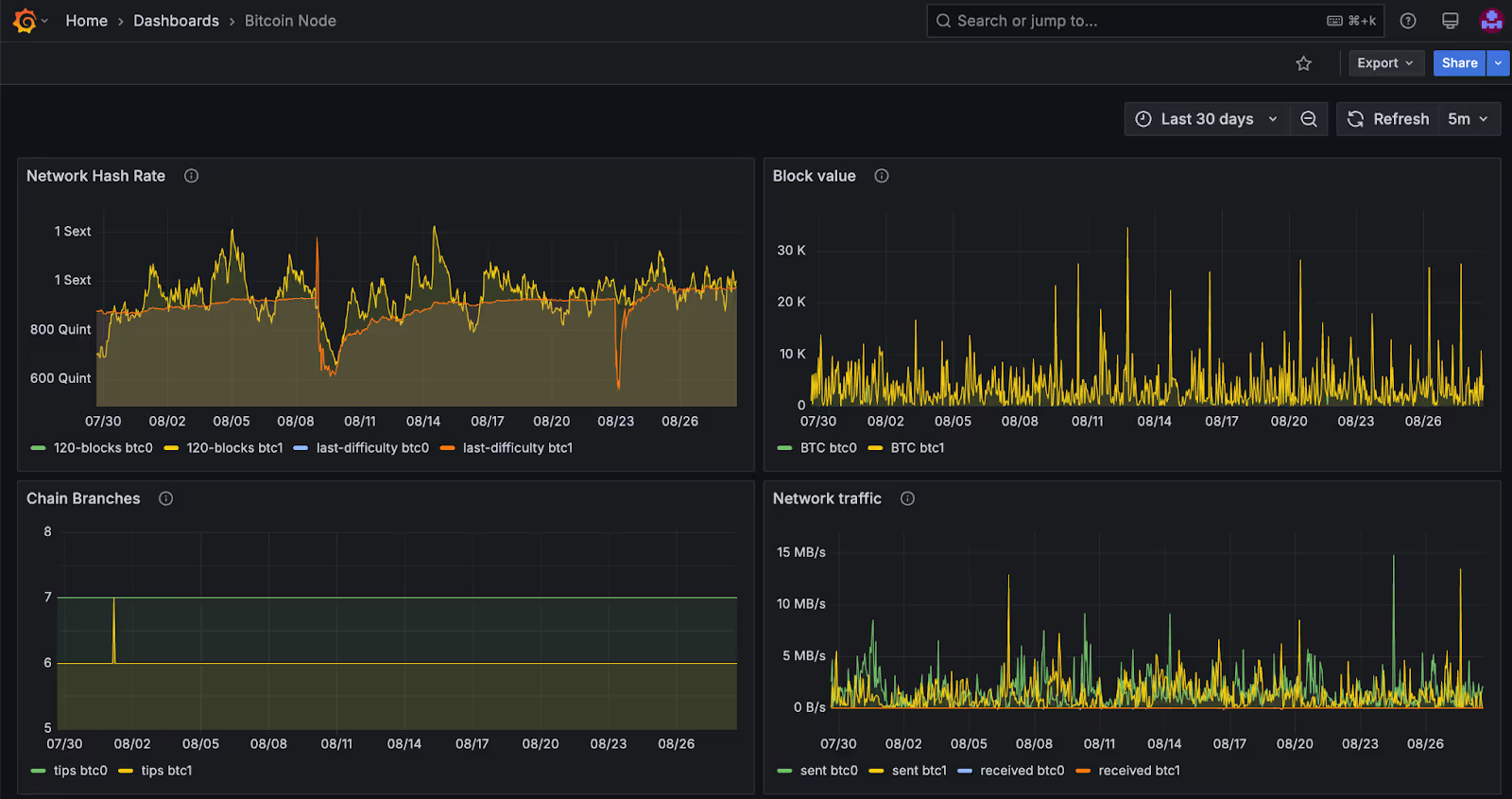

| Observability | VictoriaMetrics, Grafana, Alertmanager | Metrics, dashboards, alerts |

There are a few general points worth mentioning about this architecture:

The paragraphs below provide more details on the technical implementation.

Our ingestion pipeline balances freshness with stability. Streaming updates flow into BigQuery almost in real time through Pub/Sub and Dataflow, keeping the dataset close to the chain tip. But streaming alone can be fragile—short blockchain reorganizations may introduce temporary inconsistencies. To resolve this, a daily Airflow job reconciles the previous day’s data, correcting any transient errors and ensuring long‑term consistency.

To further reduce risk, we never parse the very last blocks immediately. Instead, we deliberately wait on the most recent 2–4 blocks before parsing them. This simple delay guards against orphaned data. The daily job then finalizes the day once the chain is stabilized in 3-4 hours, resulting in a consistent dataset even in the face of reorgs.

Catching up with the entire Bitcoin history, from 2009 onwards, is a separate operational mode. We parallelized the workload into many Airflow jobs and let the cluster autoscale only during that window.. Thus, we caught up in less than 24 hours after Bitcoin nodes were synced.

For infrastructure, we relied on Terraform and Terragrunt, while runtime deployments followed a GitOps model with Flux CD and Helm charts. Treating Kubernetes configuration as code made every change auditable and every environment reproducible—the cluster state always aligns with what’s in Git.

Metrics stack:

Typical alerts we track continuously:

The proper tuning of alerting takes effort and time for testing. But in the end, we’ve got the system that generates only meaningful alerts that require an engineer’s attention, and can handle all the rest following the runbooks. But before that, we had some classic situations like:

Kubernetes liveness probes were too aggressive for rare long intervals between blocks (we saw restarts when block mining paused for ~2h). We tuned the liveness/readiness timeouts to practical thresholds (increased initial probe timeouts) so nodes are not restarted unnecessarily. This reduced flapping and false incidents.

Health checks:

Short glimpse at the developed failure runbooks:

| Failure mode | Likely cause | Mitigation / Runbook |

|---|---|---|

| Airflow’s daily job fails | Data race with streaming / transient errors | Restart job after streaming backlog cleared; investigate logs |

| Pod flapping (nodes) | Aggressive liveness + low block frequency | Increase liveness timeout; add redundancy (2+ node pods) |

| Reorg-induced incorrect entries | Parsing too-recent blocks | Delay parsing of the last N blocks; daily reconciliation |

| Heavy backfill overload | Resource limits during parallel backfill | Autoscale cluster for backfill; throttle parallelism if RPC overloaded |

Custom dashboards:

When tackling projects like this, we found a few key principles consistently paid off:

If the core ETL logic is sound and its semantics are stable, reusing mature, well-understood components is often the most efficient path. This approach significantly saves time and reduces implementation risk compared to a full rewrite.

For any Bitcoin-like blockchain analytics pipeline, anticipating and mitigating reorgs is crucial. Our strategy combined a short, intentional parsing delay for recent blocks with periodic, full reconciliation. This dual approach ensures data accuracy even when the chain experiences temporary forks.

It's vital to distinguish between steady-state streaming and heavy, historical backfill operations. We treated backfill as a controlled, autoscaled process, preventing it from overburdening the continuous streaming pipeline. This separation maintains performance and stability for both, while keeping the dataset data actual for near-realtime updates.

IaC with Terraform/Terragrunt, combined with a GitOps approach using Flux CD, for long-term support and operational transparency, proved invaluable. This makes the entire deployment process readable, auditable, and repeatable, simplifying future maintenance and handovers.

The project took 1 month for a Dysnix DevOps engineer to complete. Now it continues in support mode. Here’s the set of deliverables we provided:

We delivered a low‑risk, operationally robust pipeline that balances freshness and correctness and is reproducible via IaC and GitOps. The outcome gives the client a supported dataset that can be consumed reliably, with clear runbooks and observability to handle real‑world edge cases.

And as the Bitcoin dataset is expected to run continuously, the real value comes from maintaining its stability, reacting to operational issues, and scaling improvements as needed.

Beyond initial delivery, our team will continue to provide operational support, monitoring, and incremental improvements to ensure the dataset performs reliably. This includes troubleshooting incidents, adapting the stack to evolving Google Cloud features, and keeping the deployment aligned with best practices over time.